Microsoft today claimed that Phi-3, the third iteration of its Phi family of small language models (SLMs), is the “most capable and cost-effective small language model (SLM) ever released,” saying it outperforms models of comparable size and a few larger ones.

A Small Language Model (SLM) is a type of AI model designed to be highly efficient at performing specific language-related tasks. Unlike large language models (LLMs), which are suitable for a wide range of general tasks, SLMs are built on smaller data sets, making them more efficient and cost-effective for specific use cases.

Phi-3 comes in several versions. The smallest is Phi-3 Mini, a 3.8 billion parameter model trained on 3.3 trillion tokens. Despite its relatively small training corpus (Llama-3’s weighs in at over 15 trillion tokens), Phi-3 Mini can still handle a context of 128,000 tokens. That puts it on par with GPT-4 and ahead of Llama-3 and Mistral Large in terms of token capacity.

This means that AI giants such as Llama-3 on Meta.ai and Mistral Large may break down during a long chat or a long prompt well before this lightweight model begins to struggle.
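To make the context-window comparison concrete, here is a minimal sketch that checks whether a prompt fits within a model's token limit. Only the 128,000 figure comes from the article. The limits for Llama-3 and Mistral Large are assumptions based on their launch specifications, and the four-characters-per-token rule is a rough heuristic for English text, not a real tokenizer.

```python
# Rough heuristic for English text; a real tokenizer will give different counts.
CHARS_PER_TOKEN = 4

CONTEXT_WINDOWS = {
    "Phi-3 Mini": 128_000,    # figure quoted in the article
    "Llama-3": 8_192,         # assumption: context length at launch
    "Mistral Large": 32_000,  # assumption: context length at launch
}


def estimate_tokens(text: str) -> int:
    """Estimate the token count of a text from its character length."""
    return max(1, len(text) // CHARS_PER_TOKEN)


def fits(text: str, window: int, reserve: int = 512) -> bool:
    """Return True if the text, plus a reserve for the reply, fits in the window."""
    return estimate_tokens(text) + reserve <= window


if __name__ == "__main__":
    long_prompt = "word " * 40_000  # about 200,000 characters
    for name, window in CONTEXT_WINDOWS.items():
        verdict = "fits" if fits(long_prompt, window) else "too long"
        print(f"{name}: {verdict}")
```

The `reserve` parameter is a hypothetical allowance for the model's reply, since the prompt and the generated output share the same window.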

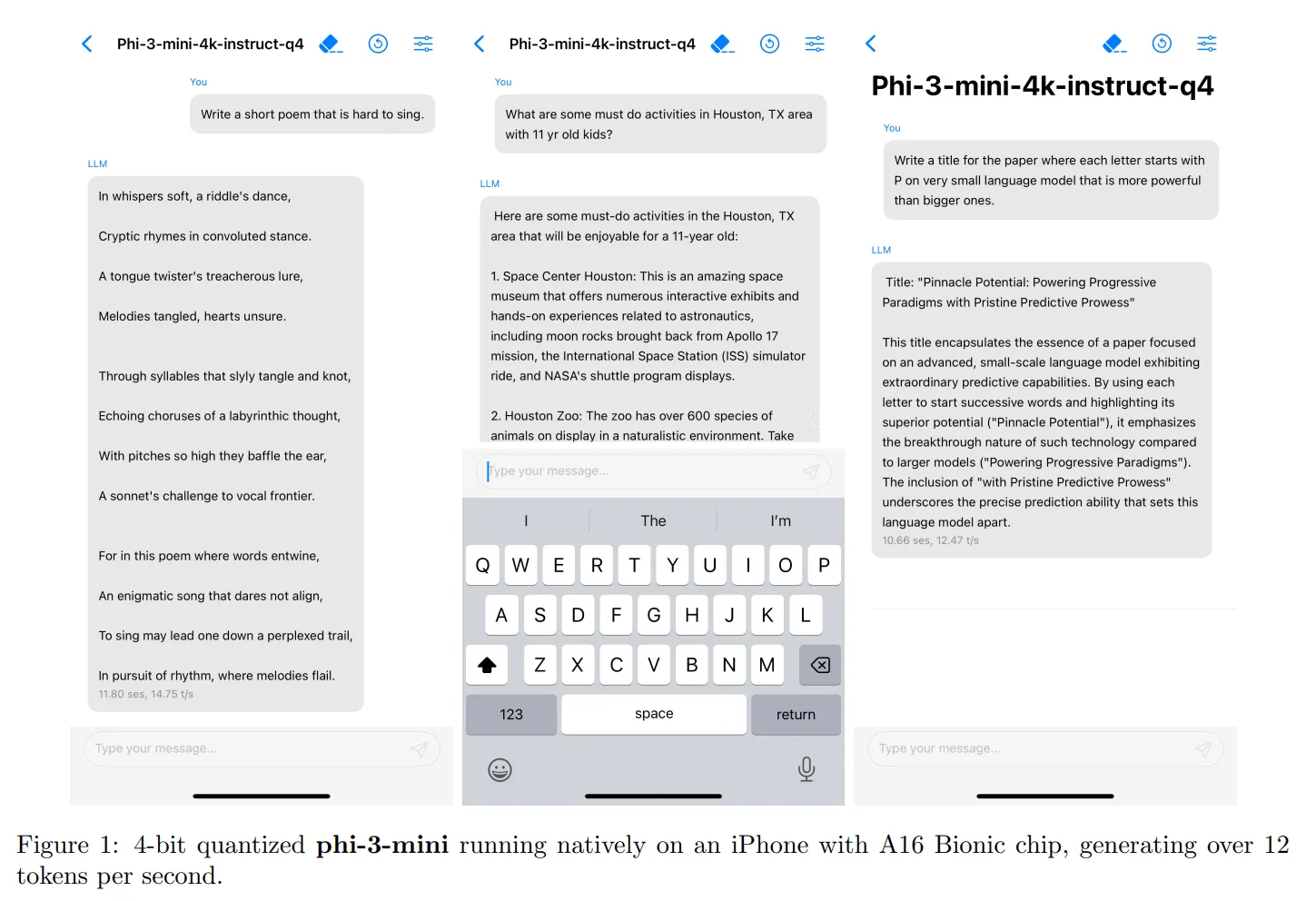

One of the most important advantages of the Phi-3 Mini is that it can be installed and run on an ordinary smartphone. Microsoft tested the model on an iPhone 14, where it ran without issues, generating 14 tokens per second. The Phi-3 Mini requires only 1.8GB of VRAM to run, making it a lightweight and efficient alternative for users with more focused needs.
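The 1.8GB figure can be sanity-checked with simple arithmetic. The sketch below estimates the memory needed for the weights alone at different precisions. The article does not state which precision was used, so treating the phone deployment as 4-bit quantized is an assumption here, and the estimate ignores activations, the KV cache, and runtime overhead.

```python
PHI3_MINI_PARAMS = 3.8e9  # parameter count quoted in the article


def weight_memory_gb(n_params: float, bits_per_weight: int) -> float:
    """Approximate memory for the weights only, in decimal gigabytes (10^9 bytes)."""
    return n_params * bits_per_weight / 8 / 1e9


def weight_memory_gib(n_params: float, bits_per_weight: int) -> float:
    """Same estimate in binary gibibytes (2^30 bytes)."""
    return n_params * bits_per_weight / 8 / 2**30


if __name__ == "__main__":
    for bits in (16, 8, 4):
        gb = weight_memory_gb(PHI3_MINI_PARAMS, bits)
        gib = weight_memory_gib(PHI3_MINI_PARAMS, bits)
        print(f"{bits}-bit weights: {gb:.2f} GB ({gib:.2f} GiB)")
```

At 4 bits per weight the estimate comes to about 1.9 GB, or roughly 1.8 GiB, which is in line with the quoted requirement. At 16 bits the same weights would need about 7.6 GB, more than most phones can spare.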

The Phi-3 Mini may not be suitable for advanced coders or users with broad requirements, but it can be an effective alternative for those with specific needs. For example, startups that need a chatbot, or people who use an LLM for data analytics, can use the Phi-3 Mini for tasks like cleaning data, extracting information, performing mathematical reasoning, and building agents. When given Internet access, the model can become quite powerful, compensating for its limited built-in capabilities with real-time information.

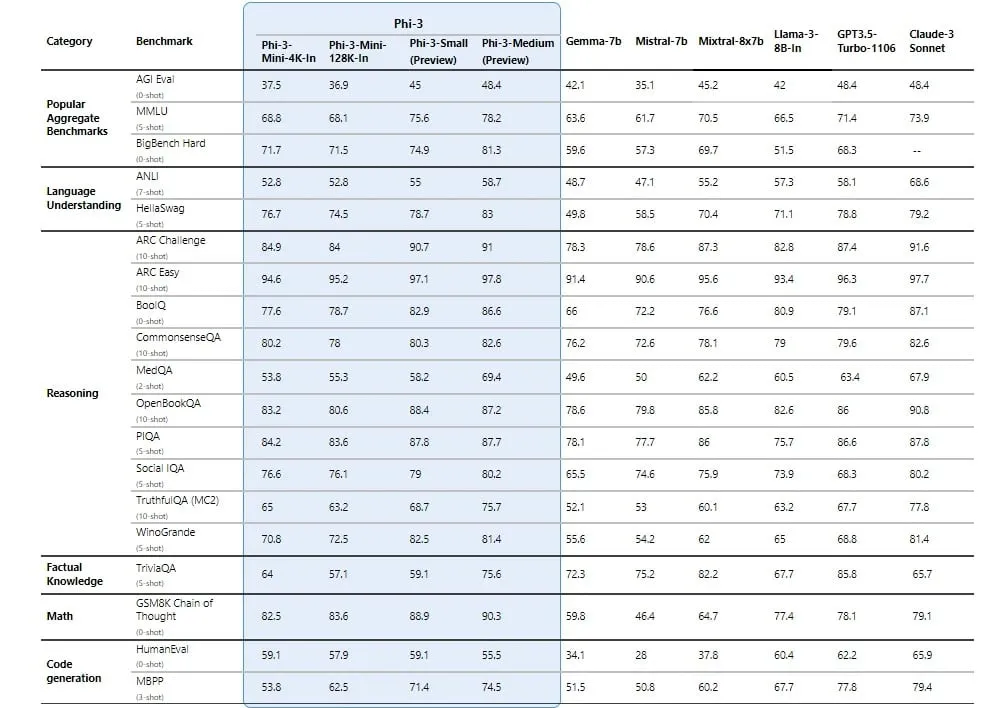

The Phi-3 Mini achieved high test scores thanks to Microsoft’s focus on curating its data sets to contain the most useful information possible. The wider Phi family is not well suited to tasks requiring factual knowledge, but its strong reasoning skills give it an edge over its main competitors. Phi-3 Medium (a 14 billion parameter model) consistently outperforms powerful LLMs such as GPT-3.5 (the LLM that powers the free version of ChatGPT), and the Mini version outperforms powerful models such as Mixtral-8x7B on most synthetic benchmarks.

However, it is worth noting that Phi-3 is not open source like its predecessor, Phi-2. Instead, it is an open model. This means it is accessible and usable, but it lacks the open source license of Phi-2, which allows for wider use and commercial applications.

In the coming weeks, Microsoft says it will release more models in the Phi-3 family, including the Phi-3 Small (7 billion parameters) and the aforementioned Phi-3 Medium.

Microsoft has made the Phi-3 Mini available on Azure AI Studio, Hugging Face, and Ollama. The model is instruction-tuned and optimized for ONNX Runtime, with support for Windows DirectML as well as cross-platform support across a variety of GPUs, CPUs, and mobile hardware.