It can be easy and even comforting to imagine that using AI tools involves interacting with a purely objective, stoic, and independent machine that knows nothing about you. But when you factor in cookies, device identifiers, login and account requirements, and even the occasional human reviewer, the voracious appetite that online services have for your data seems insatiable.

Privacy is a key concern for both consumers and governments regarding the widespread deployment of AI. Overall, the platforms emphasize their privacy features, even if those features are hard to find. (Paid and business plans often exclude training on submitted data entirely.) But it can still feel intrusive every time a chatbot “remembers” something.

This article explains how to tighten your AI privacy settings by deleting old chats and conversations and by turning off the settings in ChatGPT, Gemini (formerly Bard), Claude, Copilot, and Meta AI that allow developers to train their systems on your data. These instructions apply to each service's desktop, browser-based interface.

ChatGPT

OpenAI’s ChatGPT, still the flagship product of the generative AI movement, has several features that improve privacy and ease concerns about user prompts being used to train the chatbot.

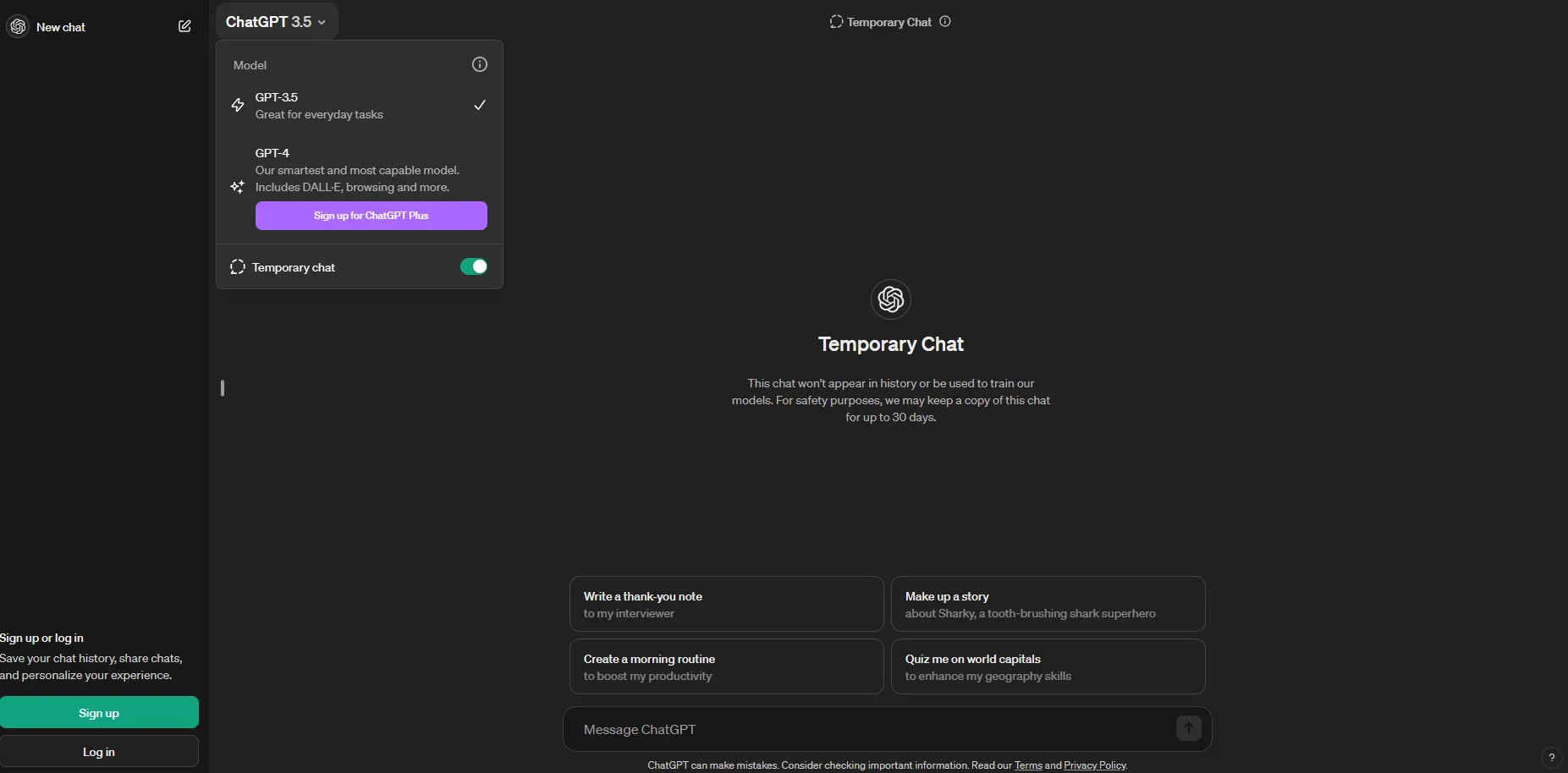

Last April, OpenAI announced that ChatGPT could be used without an account. By default, messages shared through the free, no-account version are not saved. However, users who do not want their chats used to train ChatGPT still need to turn on the ‘Temporary chat’ setting in the ChatGPT drop-down menu at the top left of the screen.

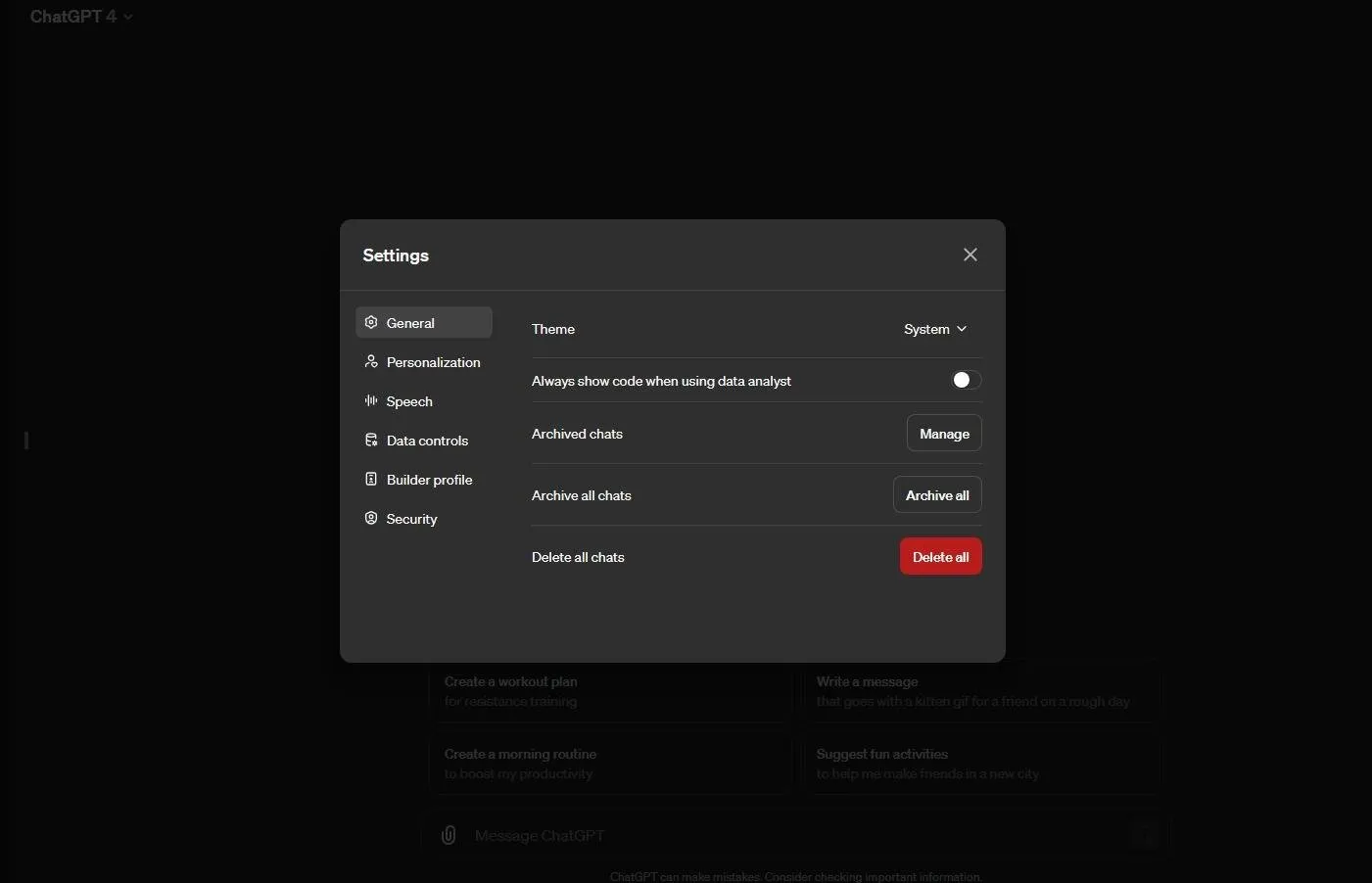

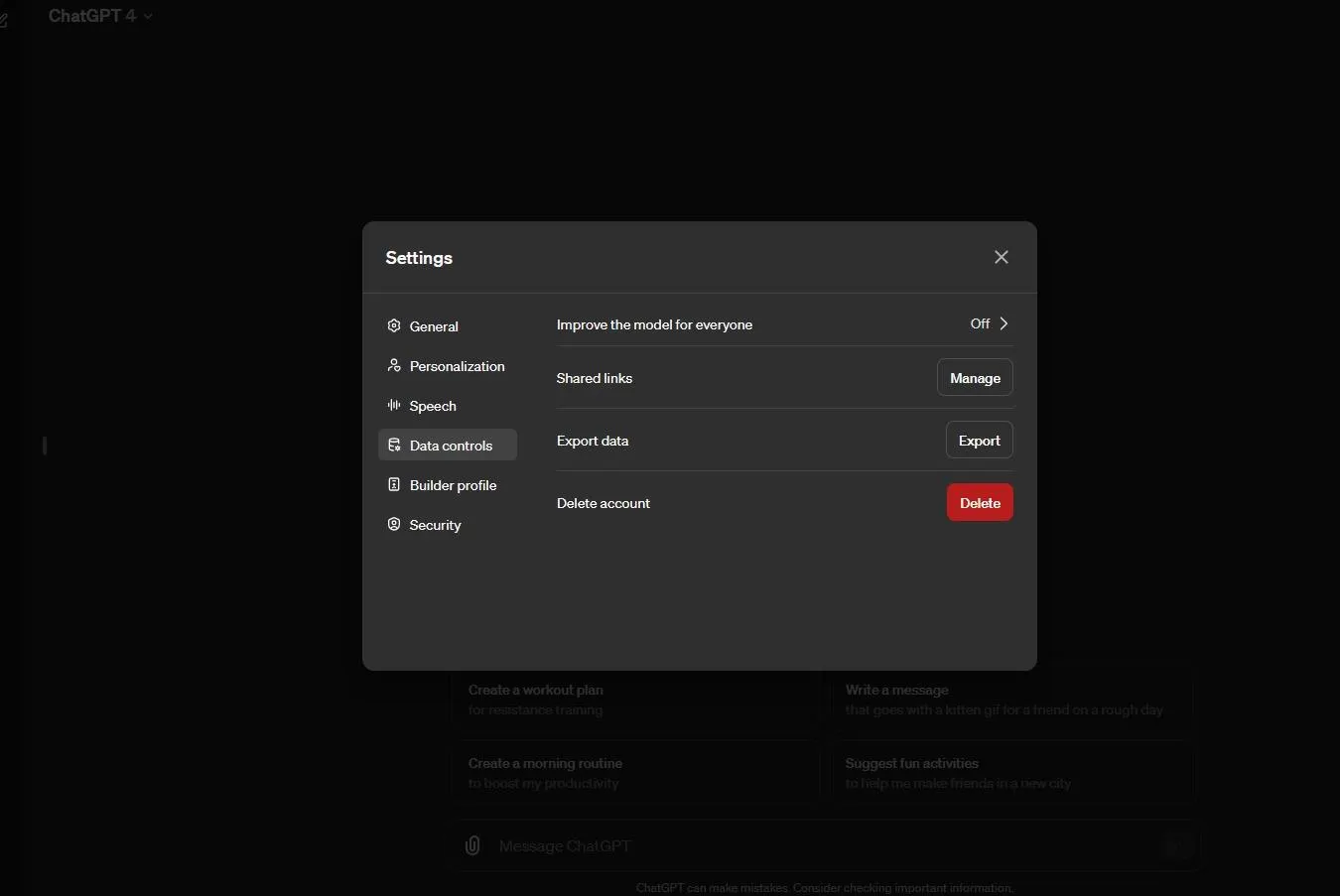

But how do you turn off training on your prompts if you have an account and a ChatGPT Plus subscription? GPT-4 gives users the ability to delete all chats under the general settings. Again, if you don’t want your chats used to train the AI model, look lower down under “Data Controls” and click the arrow to the right of “Improve the model for everyone.”

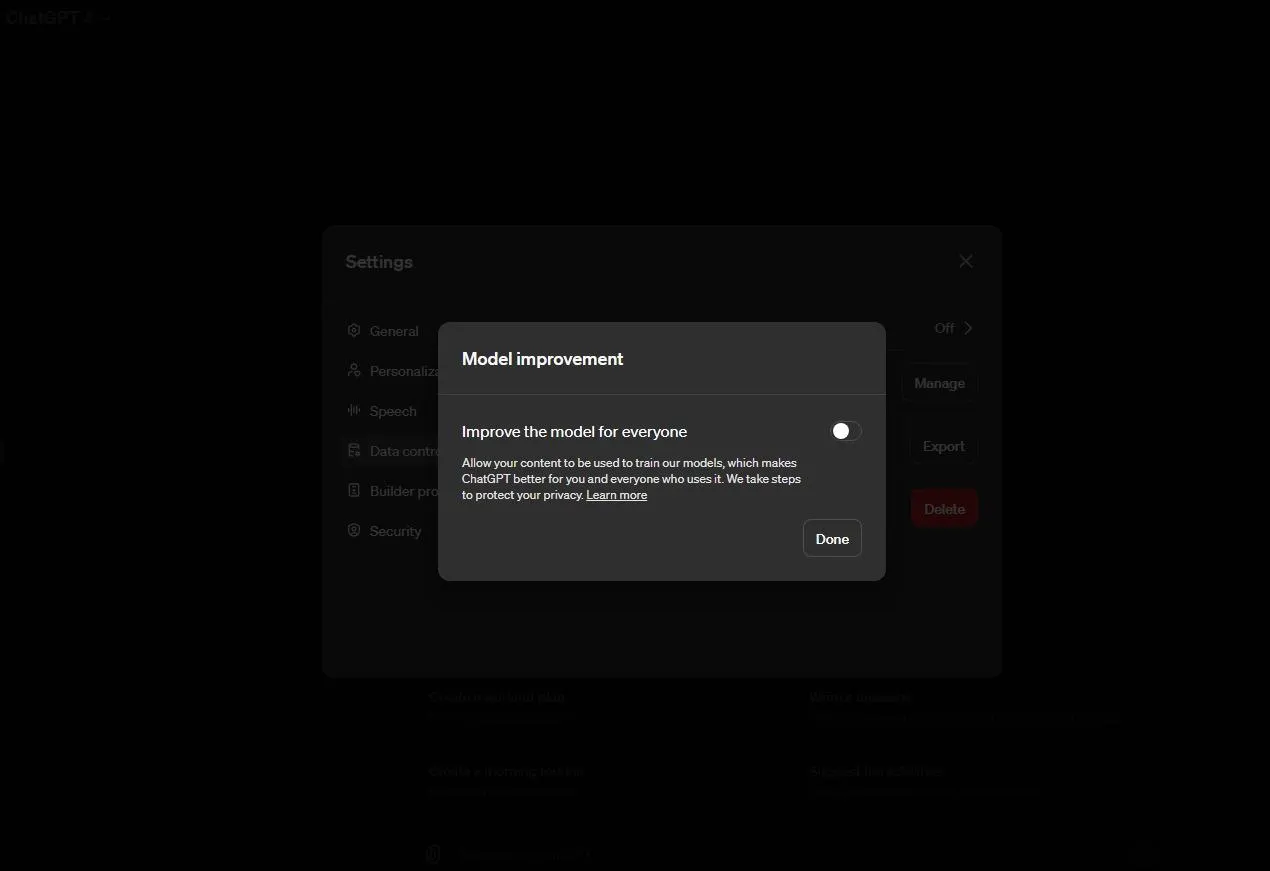

A separate ‘Improve Model’ section will appear, where you can toggle the setting off and select ‘Done’. This stops OpenAI from using your chats to train ChatGPT.

However, there are still caveats.

“While history is disabled, new conversations will not be used to train and improve models and will not appear in the history sidebar,” an OpenAI spokesperson told Decrypt. “To monitor for abuse and review only when necessary, we retain all conversations for 30 days and then permanently delete them.”

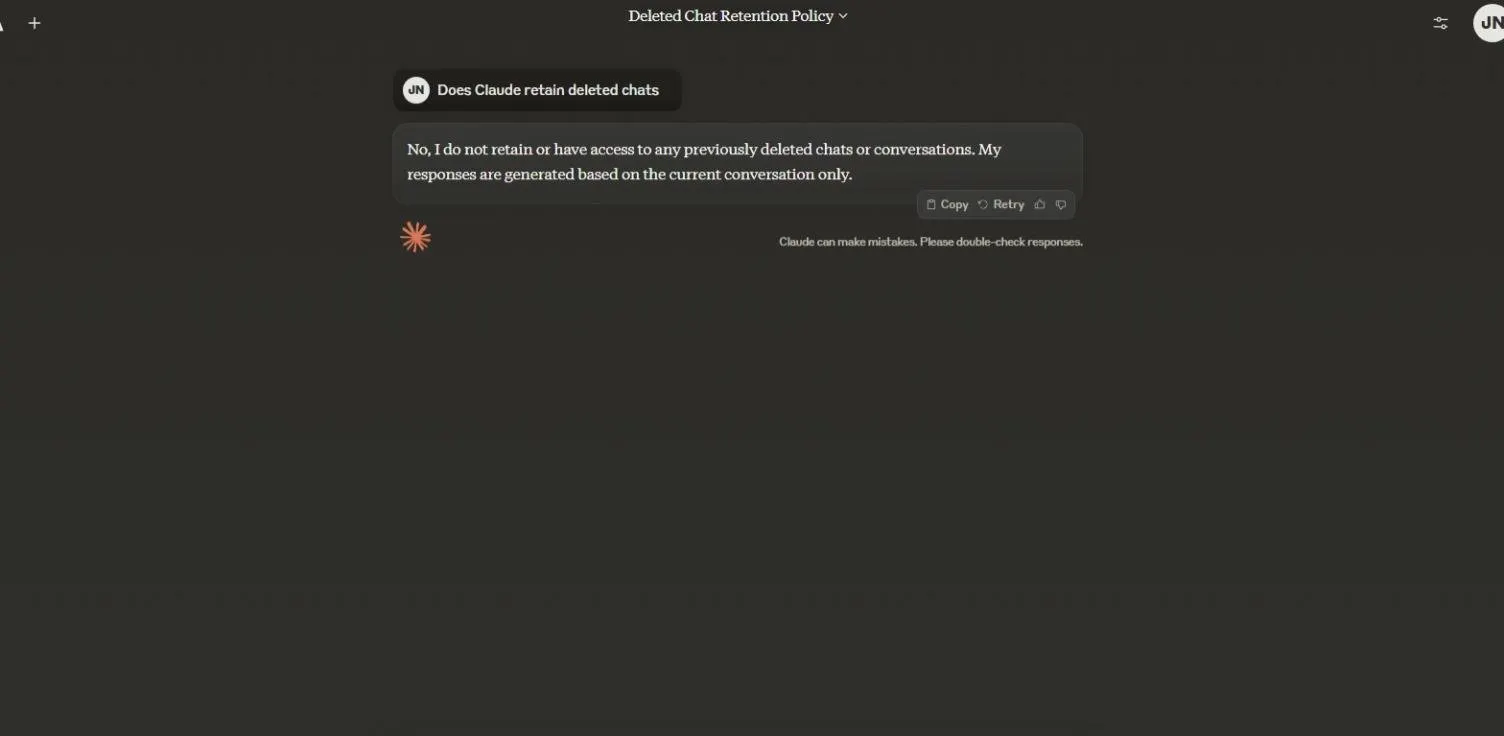

Claude

“We do not primarily train our models on user-submitted data,” an Anthropic spokesperson told Decrypt. “Until now, we have not used customer- or user-submitted data to train generative models, and we explicitly state this in the model cards of the Claude 3 model family.”

“We may use user messages and output to train Claude if you give us explicit permission, such as clicking the thumbs up or thumbs down signal for a specific Claude output to provide feedback,” the company added. This helps the AI model “learn patterns and connections between words.”

If you delete an archived chat in Claude, you will no longer have access to it. Asked about this, the Claude AI agent helpfully replies in the first person: “We cannot retain or access previously deleted chats or conversations. My responses are generated based solely on the current conversation.”

Like ChatGPT, Claude retains some information as required by law.

“We also retain data on our backend systems for the period specified in our Privacy Policy, except to enforce our acceptable use policy, address violations of our Terms of Service or policies, or as required by law,” Anthropic explains.

Regarding the information Claude collects across the web, an Anthropic spokesperson told Decrypt that the AI developer’s web crawler respects industry-standard technical signals, such as robots.txt, that site owners can use to opt out of data collection, and that Anthropic does not access password-protected pages or circumvent CAPTCHA controls.
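For site owners curious how a robots.txt opt-out works in practice, here is a minimal sketch using Python's standard `urllib.robotparser` module. The crawler name `ExampleAIBot`, the sample rules, and the `can_crawl` helper are hypothetical and chosen only for illustration; they are not taken from Anthropic's documentation, so check each crawler's published user-agent string before relying on a rule like this.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: block one AI crawler, allow everyone else.
# "ExampleAIBot" is a made-up user-agent name used for illustration.
ROBOTS_TXT = """\
User-agent: ExampleAIBot
Disallow: /

User-agent: *
Allow: /
"""


def can_crawl(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if the rules in robots_txt let user_agent fetch url."""
    parser = RobotFileParser()
    # parse() takes the file's lines directly, so no network request is made.
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    page = "https://example.com/blog/post"
    print("ExampleAIBot allowed:", can_crawl(ROBOTS_TXT, "ExampleAIBot", page))
    print("SomeOtherBot allowed:", can_crawl(ROBOTS_TXT, "SomeOtherBot", page))
```

Keep in mind that robots.txt is a voluntary signal: it only keeps out crawlers that choose to honor it.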

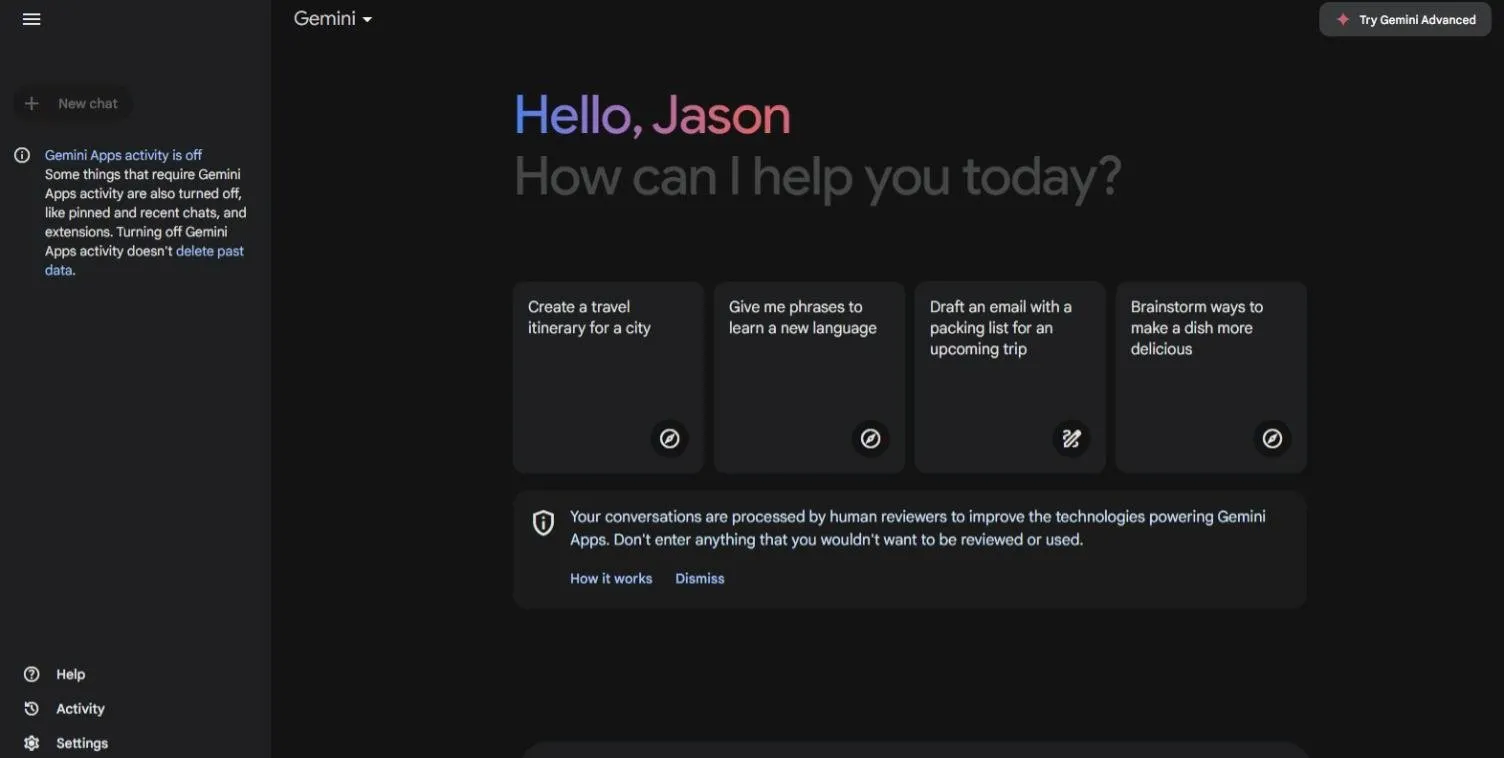

Gemini

By default, Google tells Gemini users that “conversations will be processed by human reviewers to improve the technology that powers Gemini apps. Please don’t enter anything you wouldn’t want reviewed or used.”

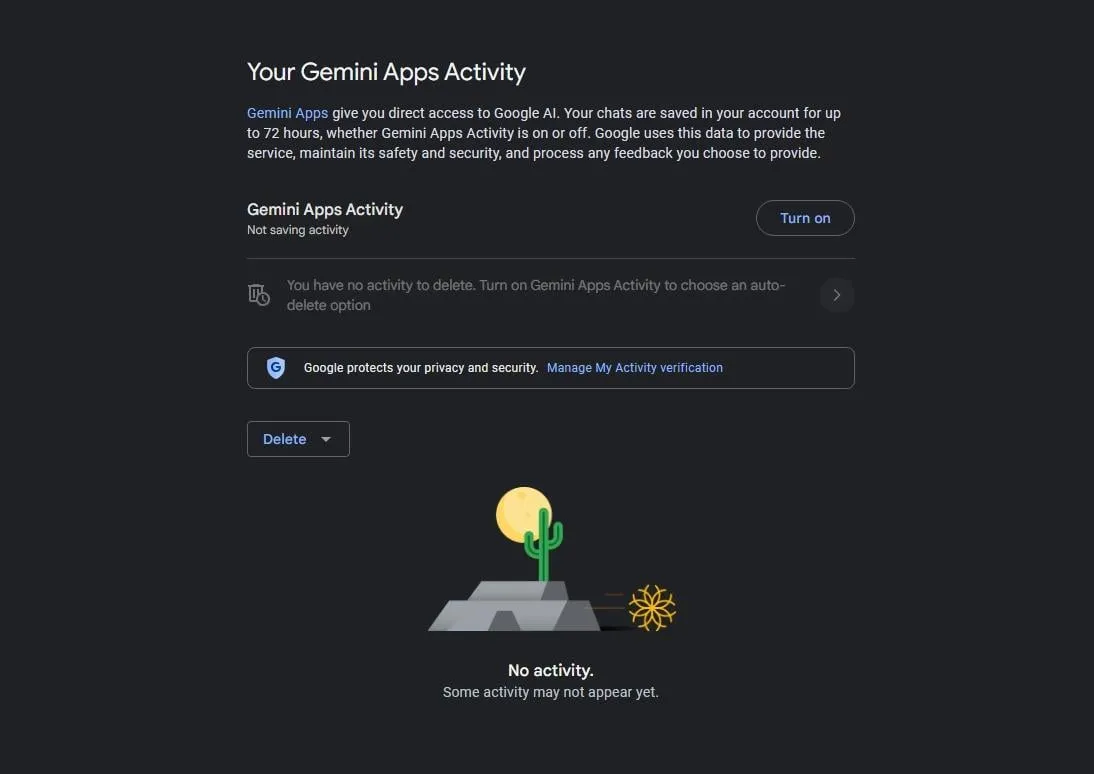

However, Gemini AI users can choose to delete their chatbot history and opt out of having their data used for model training going forward.

To do both, go to the bottom left of the Gemini homepage and find “Activity.”

From the Activity screen, users can turn off “Gemini App Activity”.

A Google representative explained to Decrypt what the “Gemini App Activity” setting does.

“Turning this feature off essentially prevents future conversations from being used to improve generative machine learning models,” the representative said. “In this case, your conversation will be stored for up to 72 hours to enable us to provide you with our services and address any feedback you choose to provide. During this 72-hour period, unless you choose to provide feedback in Gemini Apps, the conversation will not be used to improve Google products, including machine learning technologies.”

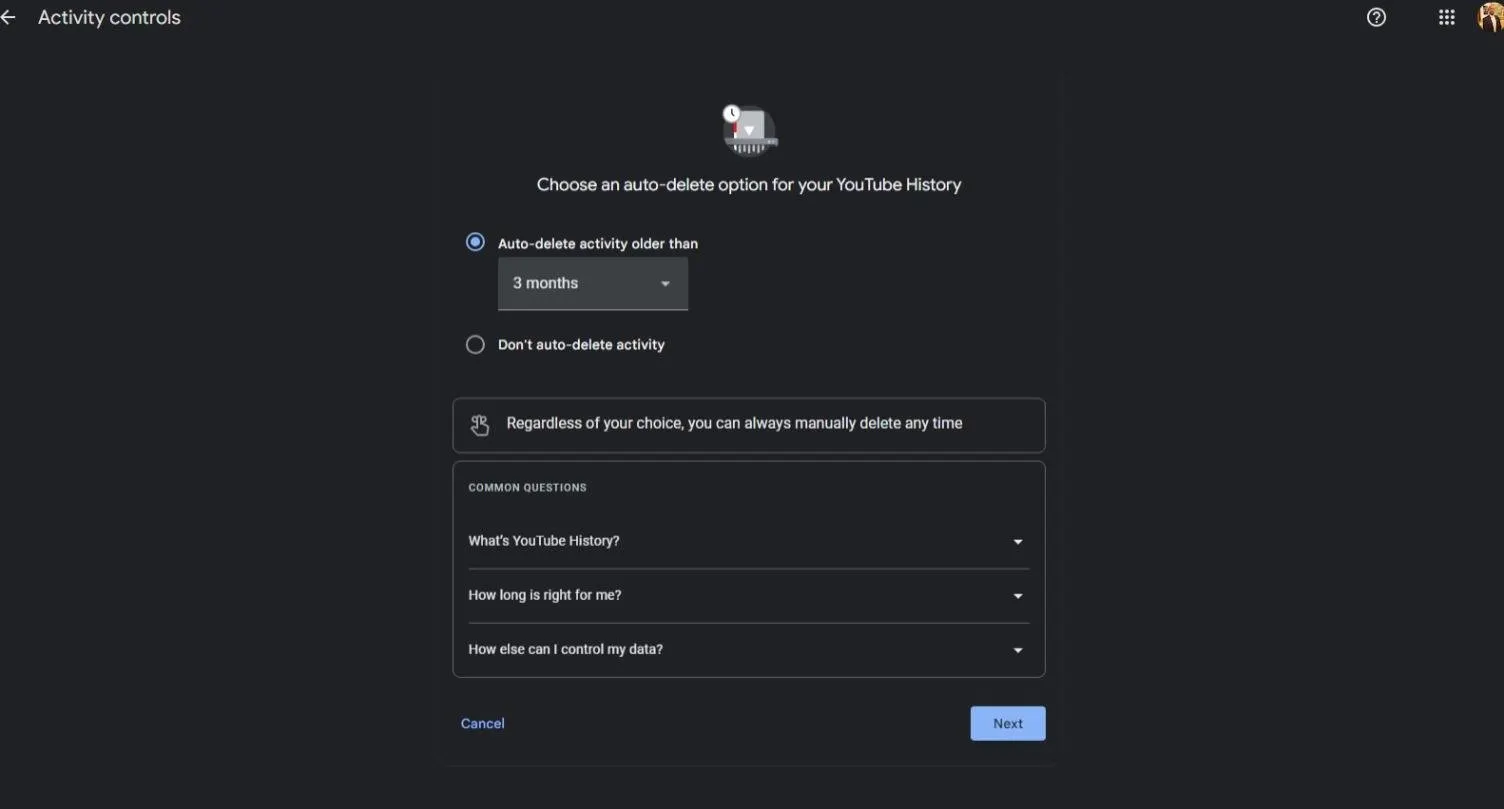

There is also a separate setting to clear the YouTube history linked to your Google account.

Copilot

Last September, Microsoft added its Copilot generative AI model to its Microsoft 365 suite of business tools, the Microsoft Edge browser, and the Bing search engine. Microsoft has also included a preview version of the chatbot in Windows 11. In December, Copilot was added to the Android and Apple app stores.

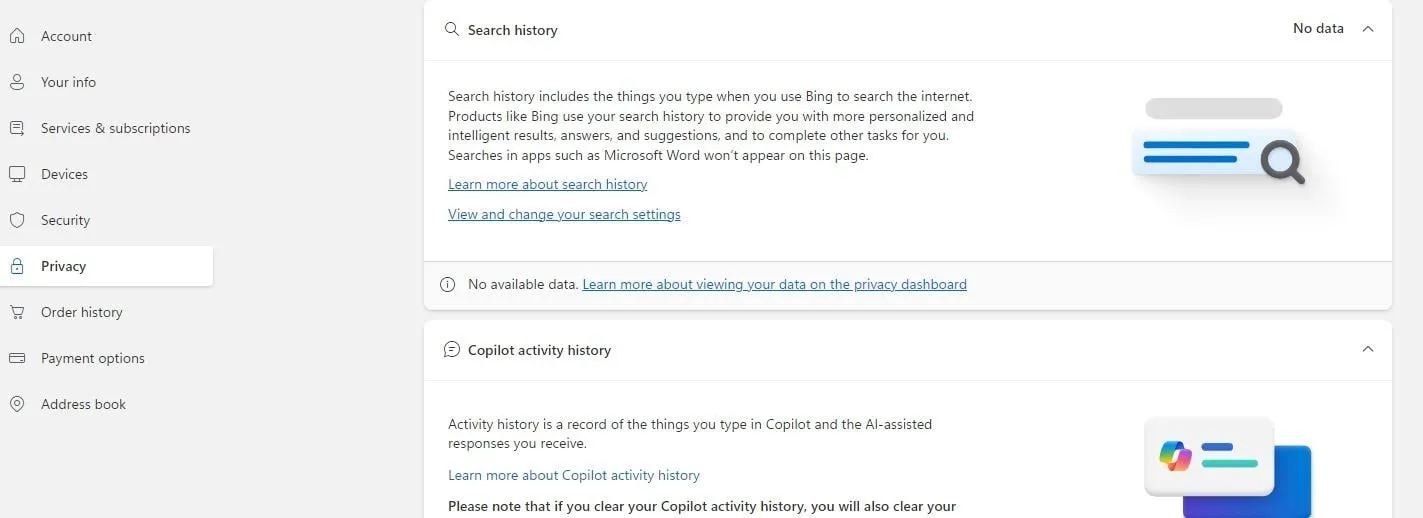

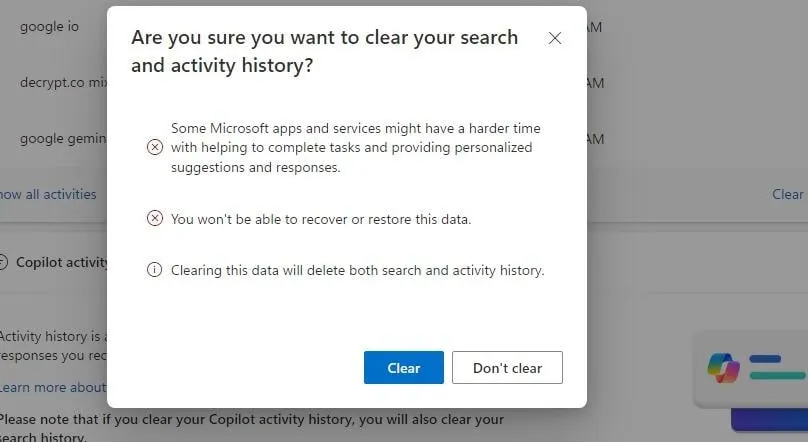

Microsoft doesn’t provide an option to opt out of having your data used to train AI models, but like Google Gemini, Copilot users can clear their history. However, the process is less intuitive in Copilot because old chats remain visible on the desktop version’s home screen even after they have been deleted.

To find the option to delete your Copilot history, open your user profile in the top right corner of the screen (you must be logged in) and select “My Microsoft Account.” Select “Privacy” on the left and scroll down to the bottom of the screen to find the Copilot section.

Because Copilot is integrated into Bing’s search engine, deleting your activity also deletes your search history, Microsoft said.

A Microsoft spokesperson told Decrypt that the tech giant protects consumers’ data through a variety of techniques, including encryption, de-identification, and storing and retaining information associated with the user only for as long as necessary.

“A portion of the total number of user prompts in Copilot and Copilot Pro responses is used to fine-tune the experience,” the spokesperson added. “Microsoft helps protect consumer identities by taking steps to de-identify data before it is used.” Microsoft added that it does not use any content created in Microsoft 365 (Word, Excel, PowerPoint, Outlook, Teams) to train the underlying “base model.”

Meta AI

Last April, Meta, the parent company of Facebook, Instagram, and WhatsApp, rolled out Meta AI to users.

“We’re launching a new version of Meta AI, an assistant that you can ask any question across our apps and glasses,” Meta CEO Mark Zuckerberg said in an Instagram video. “Our goal is to build the world’s best AI and make it available to everyone.”

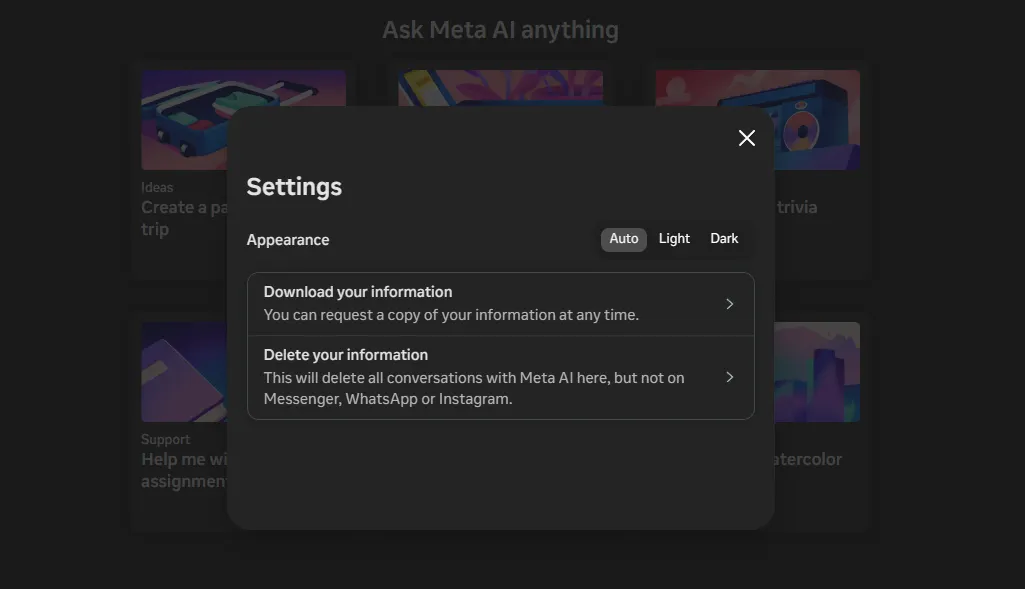

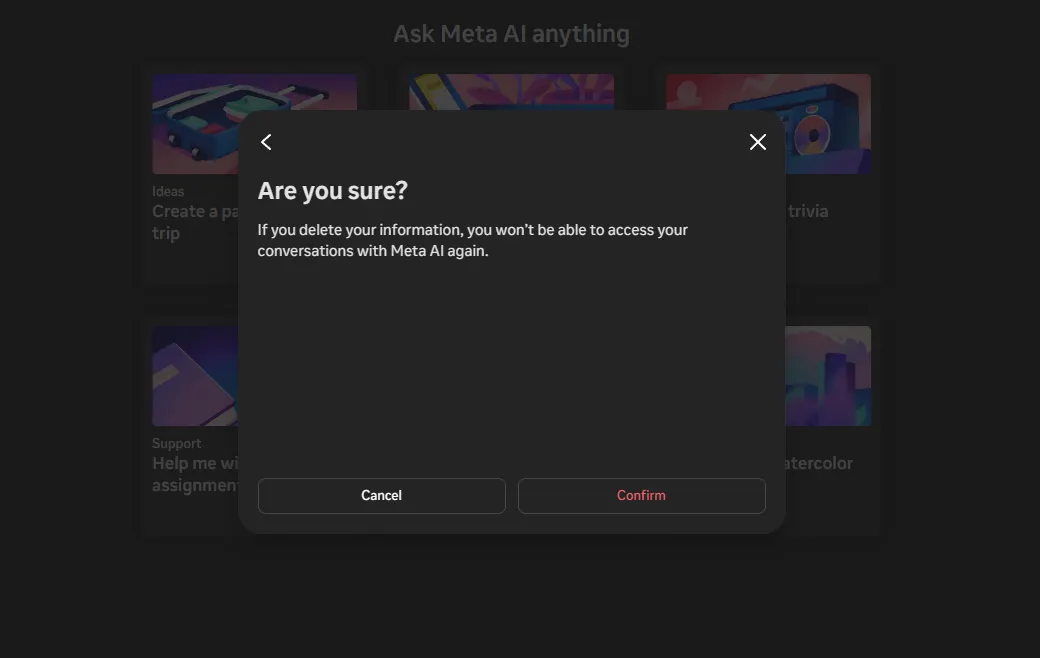

Meta AI does not give users the option to opt out of having their input used to train AI models. Meta does, however, give users the option to delete past chats with its AI agent.

To do so on a desktop computer, click the Facebook Settings tab in the bottom left corner of your screen, above your Facebook profile image. From the settings, users can delete their conversations with Meta AI.

Meta explains that deleting a conversation with Meta AI doesn’t delete your chats with other people on Messenger, Instagram, or WhatsApp.

A Meta spokesperson declined to comment on whether or how users can opt out of having their information used to train Meta AI models, instead pointing Decrypt to a September statement by the company about its privacy measures and to the Meta settings page on deleting history.

“Publicly shared posts on Instagram and Facebook, including photos and text, were part of the data used to train the generative AI model,” the company explains. “We didn’t train these models using people’s private posts. Additionally, we do not use the content of private messages with friends or family to train our AI.”

However, everything you send to Meta AI is used for model training and beyond.

“We use the information people share when they interact with generative AI capabilities, such as Meta AI or companies using generative AI, to improve our products and for other purposes,” Meta adds.

Conclusion

Of the major AI models covered above, OpenAI’s ChatGPT provided the easiest way to clear history and opt out of having chatbot prompts used to train AI models. Meta’s privacy practices appear to be the most opaque.

Many of these companies also offer mobile versions of their powerful apps that offer similar controls. Individual steps may vary, and privacy and history settings may work differently across platforms.

Unfortunately, raising every privacy setting to its strictest level may not be enough to protect your information, according to Erik Voorhees, founder and CEO of Venice AI, who told Decrypt that it would be naive to assume that your data has been deleted.

“Once a company has your information, you can’t trust that it’s gone,” he said. “People should assume that everything they write to OpenAI goes to the company and that it can keep it forever.”

“The only way to solve this problem is to use a service where the information never moves to a central repository,” Voorhees added.

Edited by Ryan Ozawa.