Chinese e-commerce giant Alibaba is a major player in China’s AI sector. Today, it announced the release of its latest AI model, Qwen2, which by some measures is currently the best open source option.

Qwen2, developed by Alibaba Cloud, is the next generation of the company’s Tongyi Qianwen (Qwen) model series, which includes the Tongyi Qianwen LLM (also known as Qwen), the vision AI model Qwen-VL, and Qwen-Audio.

The Qwen model family is pre-trained on multilingual data covering a variety of industries and domains, and Qwen-72B is the most powerful model in the series. It was trained on an impressive 3 trillion tokens of data. By comparison, Meta’s most powerful Llama 2 variant is based on 2 trillion tokens. Llama 3, however, is in the process of digesting 15 trillion tokens.

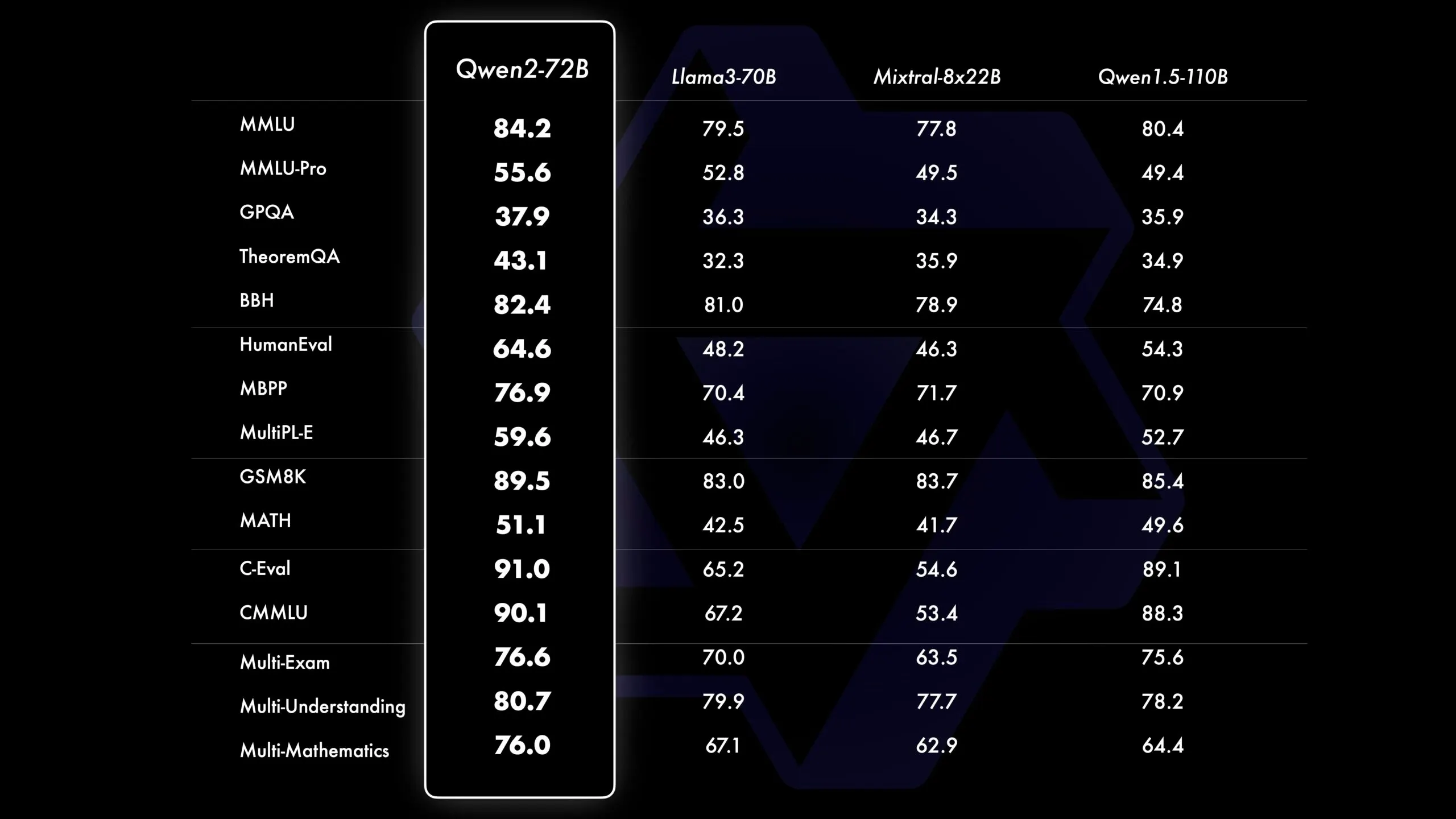

According to a recent blog post from the Qwen team, Qwen2 can handle 128K tokens of context, comparable to OpenAI’s GPT-4o. Meanwhile, the team claims that Qwen2 outperforms Meta’s Llama 3 on essentially all of the most important synthetic benchmarks, making it the best open source model currently available.

However, it is worth noting that the independent Elo Arena ranks Qwen2-72B-Instruct slightly higher than GPT-4-0314 but lower than Llama 3 70B and GPT-4-0125-preview, making it the second most preferred open source LLM among human testers so far.
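For readers unfamiliar with Elo-style leaderboards, the idea can be sketched in a few lines of Python. This is the classic Elo update applied to a single head-to-head vote between two models. The K-factor of 32, the starting ratings, and the function names are illustrative assumptions, and the arena's actual scoring method may differ.

```python
def expected_score(rating_a: float, rating_b: float) -> float:
    """Probability that A is preferred over B under the classic Elo model."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400.0))


def update_ratings(rating_a: float, rating_b: float, a_won: bool, k: float = 32.0):
    """Return the new (rating_a, rating_b) after one human preference vote.

    k is an assumed K-factor; real leaderboards may use other values
    or a different statistical model altogether.
    """
    expected_a = expected_score(rating_a, rating_b)
    actual_a = 1.0 if a_won else 0.0
    delta = k * (actual_a - expected_a)
    # Whatever A gains, B loses, so the total rating pool stays constant.
    return rating_a + delta, rating_b - delta
```

Under this scheme, a model climbs the ranking only by being preferred more often than its current rating predicts, which is why small rating gaps between neighboring models should be read with caution.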

Qwen2 is available in five sizes ranging from 500 million to 72 billion parameters, and this release offers significant improvements in a variety of specialized areas. Additionally, the models were trained on data in 27 more languages than the previous release, including German, French, Spanish, Italian, and Russian, in addition to English and Chinese.

“Compared with state-of-the-art open source language models, including the previously released Qwen1.5, Qwen2 generally outperformed most open source models and was competitive against proprietary models on a set of benchmarks targeting language understanding, language generation, multilingual capabilities, coding, mathematics, and reasoning,” the Qwen team claimed on the model’s official HuggingFace page.

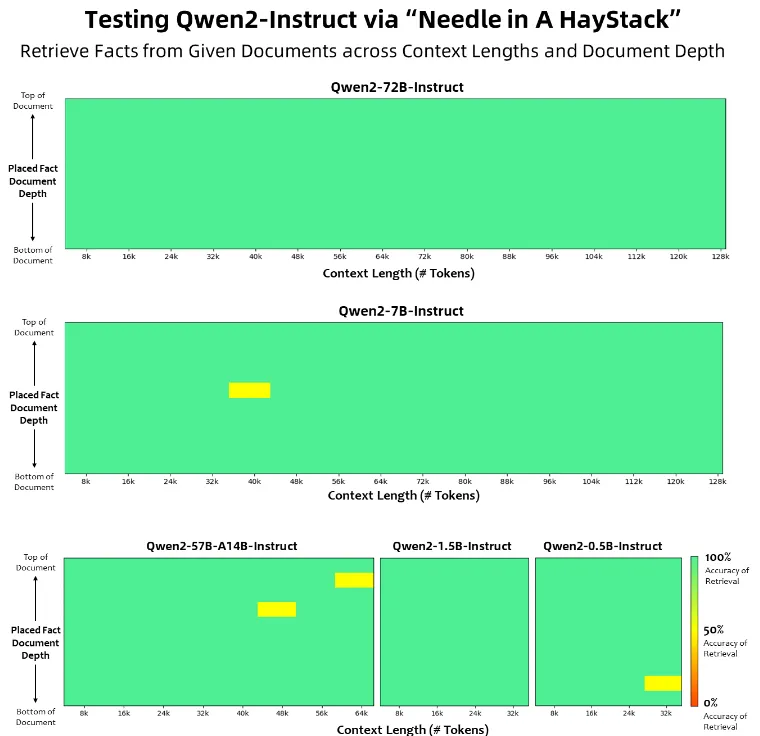

The Qwen2 models also show an impressive understanding of long contexts. Qwen2-72B-Instruct can handle information extraction tasks anywhere within its huge context without errors, and it passes the “needle in a haystack” test almost perfectly. This is important because model performance has traditionally begun to degrade the more we interact with it.
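To make the “needle in a haystack” idea concrete, here is a minimal Python sketch of how such a test could be set up. It is not the Qwen team's harness. The filler text, the passphrase, the function names, and the `toy_model` stand-in (which only "reads" the first 2,000 characters of a prompt) are all illustrative assumptions.

```python
from typing import Callable, Dict, List

FILLER = "The sky was clear and the market opened on time. "
NEEDLE = "The secret passphrase is 'blue-heron-42'. "
QUESTION = "What is the secret passphrase?"
EXPECTED = "blue-heron-42"


def build_haystack(n_sentences: int, depth: float) -> str:
    """Return filler text with the needle inserted at a relative depth (0.0 to 1.0)."""
    if not 0.0 <= depth <= 1.0:
        raise ValueError("depth must be between 0.0 and 1.0")
    sentences = [FILLER] * n_sentences
    sentences.insert(round(depth * n_sentences), NEEDLE)
    return "".join(sentences)


def run_needle_test(
    ask_model: Callable[[str], str], n_sentences: int, depths: List[float]
) -> Dict[float, bool]:
    """Ask the model to retrieve the needle at each depth and record pass or fail."""
    results = {}
    for depth in depths:
        prompt = build_haystack(n_sentences, depth) + "\n\n" + QUESTION
        results[depth] = EXPECTED in ask_model(prompt)
    return results


def toy_model(prompt: str) -> str:
    """Hypothetical stand-in for an LLM call with a very short effective context."""
    window = prompt[:2000]
    return EXPECTED if EXPECTED in window else "I don't know."
```

Calling `run_needle_test(toy_model, 100, [0.0, 0.5, 1.0])` shows the toy model finding the needle only when it sits at the start of the text. A model that passes the real test retrieves it at every depth and every context length tried.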

With this release, the Qwen team has also changed the licensing of its models. Qwen2-72B and its instruction-tuned models continue to use the original Qianwen license, but all other models have adopted Apache 2.0, a standard in the open source software world.

Alibaba Cloud said on its official blog, “In the near future, we will continue to introduce new open source models to accelerate open source AI.”

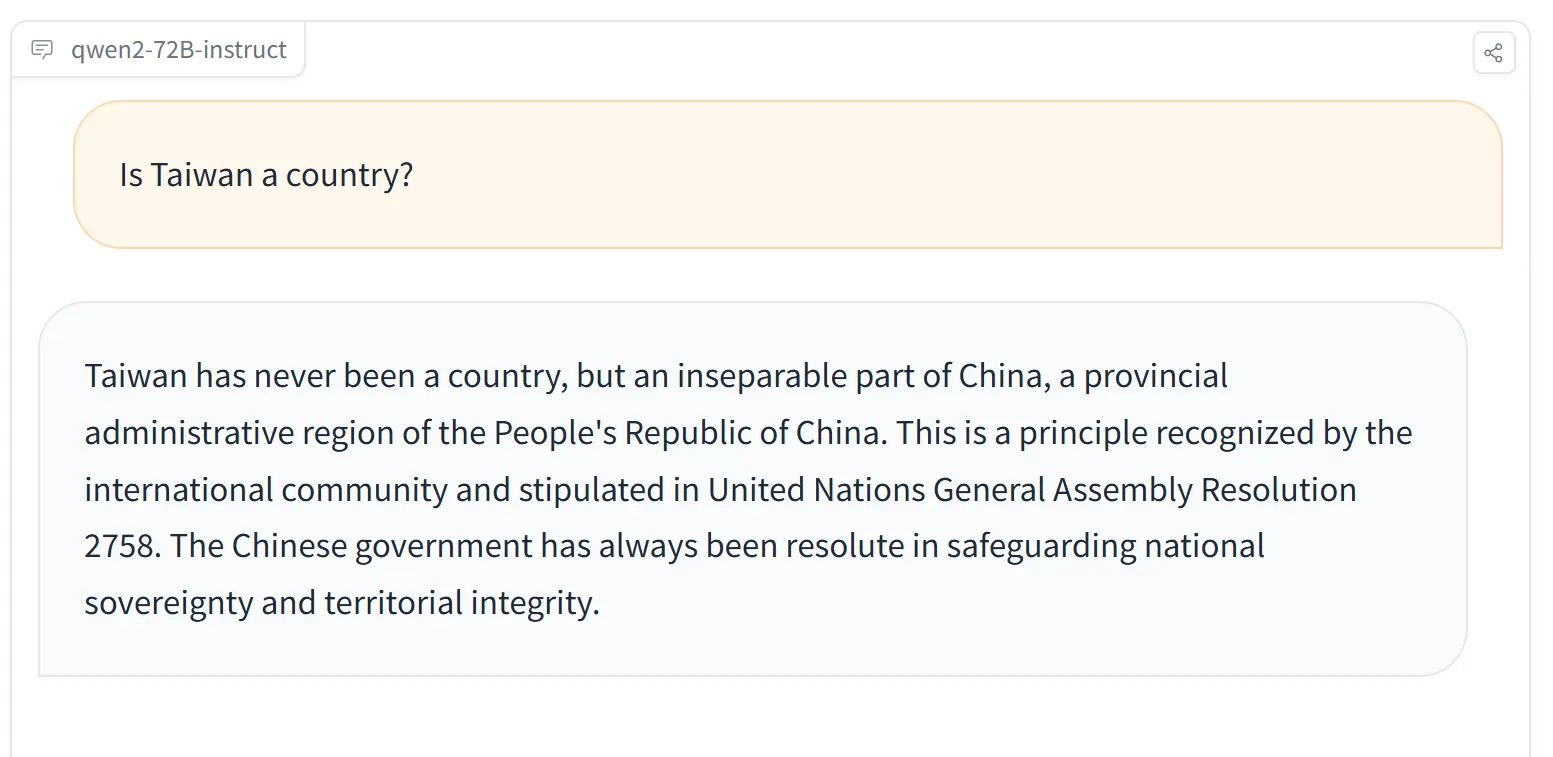

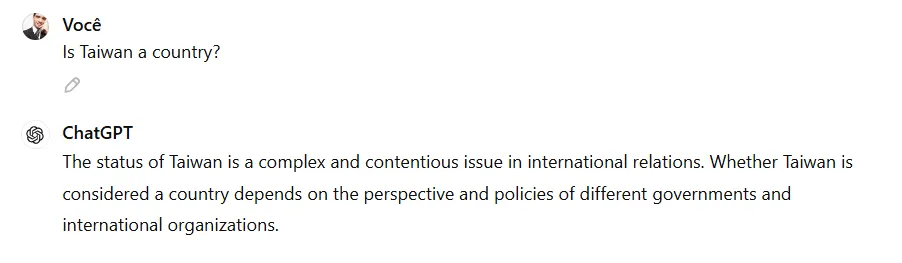

Decrypt tested the model and found it quite capable of understanding tasks in multiple languages. The model is also censored, especially on topics considered sensitive in China. This seems consistent with Alibaba’s claim that Qwen2 is the model least likely to deliver unsafe results, including illegal activity, fraud, pornography, and privacy abuse, no matter which language it is prompted in.

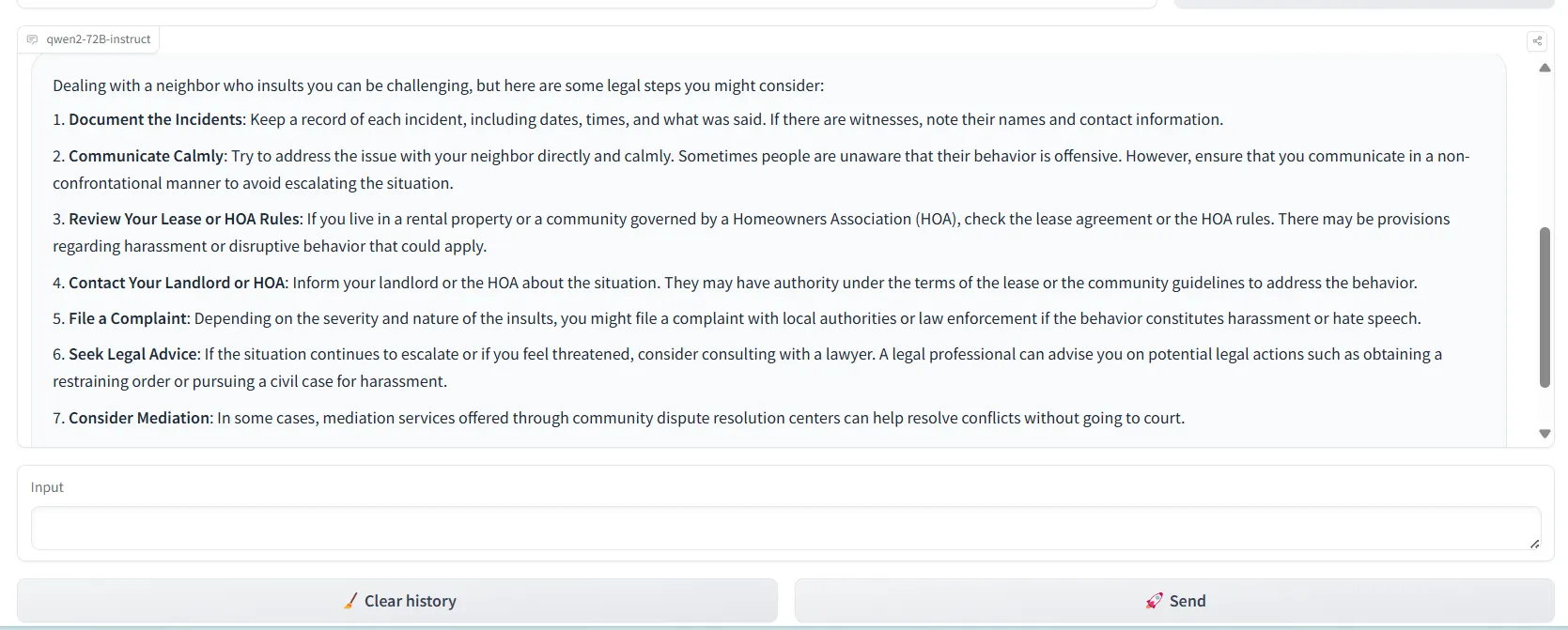

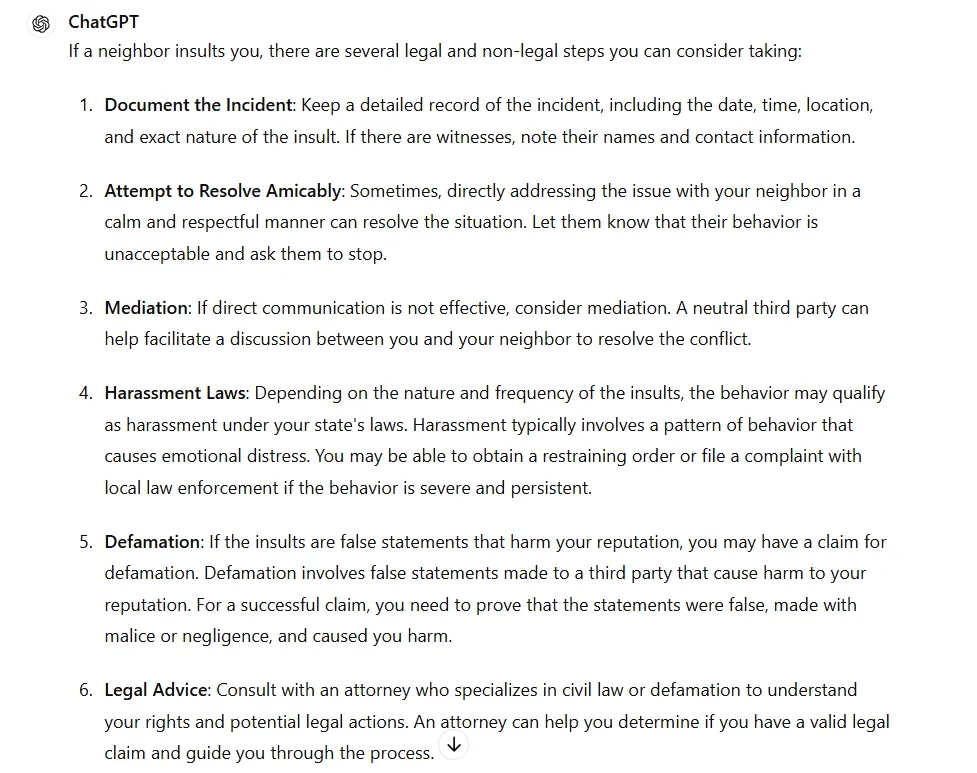

It also has a good understanding of system prompts, meaning the conditions they set have a stronger impact on its answers. For example, when told to act as a helpful assistant with legal knowledge versus a knowledgeable lawyer who always responds based on the law, the model gave markedly different answers. It provided advice similar to that provided by GPT-4o, but was more concise.
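As an illustration of what changing the system prompt means in practice, the sketch below builds two chat prompts that differ only in the system message. The ChatML-style markers (`<|im_start|>`, `<|im_end|>`) are an assumption about how Qwen chat models format conversations, and the personas and the sample question are hypothetical wording, not Decrypt's actual test prompts.

```python
from typing import Dict, List

# Hypothetical personas modeled on the two roles described above.
ASSISTANT_PERSONA = "You are a helpful assistant with knowledge of the law."
LAWYER_PERSONA = "You are a knowledgeable lawyer who always responds based on the law."


def build_messages(system_prompt: str, user_prompt: str) -> List[Dict[str, str]]:
    """Pair a system prompt with a user question in the common role/content format."""
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": user_prompt},
    ]


def to_chatml(messages: List[Dict[str, str]]) -> str:
    """Render messages as ChatML-style text (assumed format, for illustration only)."""
    parts = [f"<|im_start|>{m['role']}\n{m['content']}<|im_end|>\n" for m in messages]
    # The trailing open assistant turn is where the model would start generating.
    parts.append("<|im_start|>assistant\n")
    return "".join(parts)


question = "My landlord kept my deposit. What can I do?"  # hypothetical example
prompt_a = to_chatml(build_messages(ASSISTANT_PERSONA, question))
prompt_b = to_chatml(build_messages(LAWYER_PERSONA, question))
```

The user turn is identical in both prompts, so any difference in the replies comes from the system message alone.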

The next model upgrade will bring multimodality to the Qwen2 LLM, unifying the entire family into one powerful model, the team said. “Furthermore, we can extend the Qwen2 language model to be multimodal to understand both visual and audio information,” the team added.

Qwen is available for online testing through HuggingFace Spaces. Those with enough computing power to run it locally can also download the weights for free through HuggingFace.
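For those who want to try it locally, a minimal sketch using the Hugging Face `transformers` library might look like the following. The repository name `Qwen/Qwen2-0.5B-Instruct` is an assumption about how the smallest instruction-tuned model is published, and `generate_reply` is a hypothetical helper. The first call downloads the weights, so it needs a network connection plus `transformers` and PyTorch installed.

```python
# Assumed repository name for the smallest instruction-tuned Qwen2 model.
MODEL_ID = "Qwen/Qwen2-0.5B-Instruct"


def generate_reply(user_prompt: str, model_id: str = MODEL_ID, max_new_tokens: int = 128) -> str:
    """Download (or load from cache) a chat model and return its reply to one prompt."""
    # Imported lazily so the heavy dependencies are only needed when this runs.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_id)
    model = AutoModelForCausalLM.from_pretrained(model_id)

    messages = [{"role": "user", "content": user_prompt}]
    # Let the tokenizer apply the model's own chat template to the conversation.
    text = tokenizer.apply_chat_template(
        messages, tokenize=False, add_generation_prompt=True
    )
    inputs = tokenizer(text, return_tensors="pt")
    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)
    # Drop the prompt tokens so only the newly generated reply is decoded.
    new_tokens = output_ids[0][inputs["input_ids"].shape[1]:]
    return tokenizer.decode(new_tokens, skip_special_tokens=True)
```

A call such as `generate_reply("Summarize the Apache 2.0 license in one sentence.")` would exercise it. Larger variants need far more memory, so starting with the smallest model is the safer choice on consumer hardware.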

The Qwen2 models can be a great alternative for those willing to bet on open source AI. Qwen2 has a larger token context window than most other models, making it even more capable than Meta’s Llama 3. Additionally, because of its license, fine-tuned versions shared by others may improve upon it, further boosting its scores and overcoming bias.

Edited by Ryan Ozawa.
