It seems to be an Nvidia world these days, and everyone in technology and the exploding AI industry lives in it. Between timely market entry, cutting-edge hardware research, and a robust software ecosystem geared toward its GPUs, the company dominates both AI development and the stock market. Its latest earnings report, released earlier today, showed that quarterly revenue tripled, further boosting the stock price.

Nonetheless, longtime rival chipmaker AMD is still working hard to gain a foothold in AI, telling builders behind key technologies in the nascent space that the work can also be done on AMD hardware.

“We wanted to remind everyone that if you use PyTorch, TensorFlow, or JAX, you can use a notebook or script and it will run on AMD,” AMD senior director Ian Ferreira said at the Microsoft Build 2024 conference earlier Wednesday. “The same goes for inference engines. vLLM and ONNX also work out of the box.”

The company used its time on stage to show examples of how AMD GPUs can natively run powerful AI models like Stable Diffusion and Microsoft Phi, efficiently performing compute-intensive training tasks without relying on Nvidia's technology or hardware.

Conference organizer Microsoft reinforced the message by announcing the availability of AMD-based virtual machines on its Azure cloud computing platform, using the company’s MI300X accelerators. The chips were announced last June, began shipping in the new year, and were recently deployed in Microsoft’s Azure OpenAI Service and Hugging Face’s infrastructure.

Nvidia’s proprietary CUDA technology, which includes a full programming model and API designed specifically for Nvidia GPUs, has become the industry standard for AI development. So AMD’s main message is that its solution can fit right into the same workflow.

Seamless compatibility with existing AI systems could be a game changer, because developers could take advantage of AMD’s more affordable hardware without overhauling their codebase.

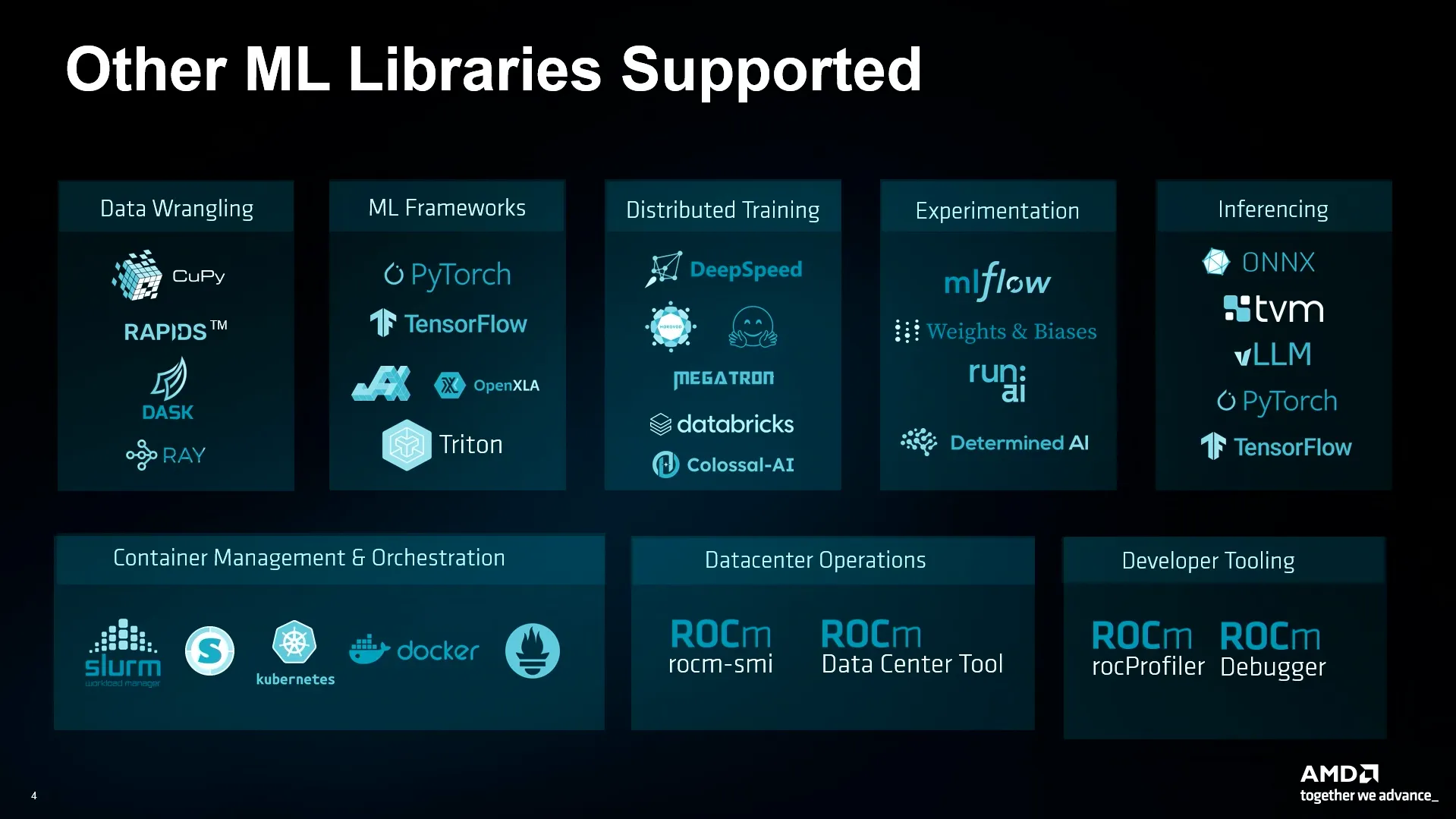

“Of course, we recognize that we need more than a framework, we need upstream elements, experimental elements, distributed training. All of this is enabled and functional on AMD,” Ferreira assured.

He then demonstrated how AMD handles a variety of tasks, from running small models like ResNet 50 and Phi-3 to fine-tuning and training GPT-2, all using the same code that runs on Nvidia cards.
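For PyTorch in particular, the "same code" claim rests on how ROCm builds of the framework present AMD GPUs: they are exposed through the same `torch.cuda` interface that Nvidia cards use, so a script that asks for the `"cuda"` device does not need to change. The following is a minimal sketch of that idea, not code shown in the talk. The helper name `describe_backend` is made up for illustration, and the function falls back gracefully when PyTorch is not installed.

```python
import importlib.util


def describe_backend() -> str:
    """Report which accelerator backend the installed PyTorch build targets.

    Hypothetical helper for illustration; it is not part of PyTorch or ROCm.
    """
    # Avoid an ImportError on machines where PyTorch is not installed.
    if importlib.util.find_spec("torch") is None:
        return "torch-not-installed"

    import torch

    # On ROCm builds, AMD GPUs are reported through the torch.cuda namespace.
    if not torch.cuda.is_available():
        return "cpu"

    # ROCm builds set torch.version.hip to a version string;
    # CUDA builds leave it as None.
    return "rocm" if getattr(torch.version, "hip", None) else "cuda"


if __name__ == "__main__":
    backend = describe_backend()
    # The device string stays "cuda" on both vendors' GPUs.
    device = "cuda" if backend in ("cuda", "rocm") else "cpu"
    print(f"backend={backend}, device string used by the script={device}")
```

Because the device string is identical on both vendors' hardware, the vendor only becomes visible when a script explicitly inspects `torch.version.hip`.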

One of the key advantages AMD highlights is its ability to efficiently process large language models.

“You can load up to 70 billion parameters on one GPU, and this instance contains eight of them,” he explained. “You can load eight different Llama 70Bs or deploy larger models like the Llama-3 400Bn on a single instance.”
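A rough back-of-the-envelope check shows why those numbers are plausible. The sketch below assumes 16-bit weights (2 bytes per parameter) and counts model weights only, ignoring the KV cache, activations, and runtime overhead. The 192 GB figure is AMD's published HBM3 capacity for the MI300X and does not come from the talk. The function names are illustrative.

```python
import math

# Assumptions (not stated in the article):
BYTES_PER_PARAM = 2        # FP16/BF16 weights
HBM_PER_GPU_GB = 192       # AMD's published MI300X memory capacity
GPUS_PER_INSTANCE = 8      # "this instance contains eight of them"


def weights_gb(params_billions: float, bytes_per_param: int = BYTES_PER_PARAM) -> float:
    """Approximate size of the model weights in GB (1 GB = 1e9 bytes)."""
    return params_billions * bytes_per_param


def gpus_needed(params_billions: float) -> int:
    """Minimum number of GPUs whose combined memory holds the weights."""
    return math.ceil(weights_gb(params_billions) / HBM_PER_GPU_GB)


if __name__ == "__main__":
    for size in (70, 400):
        print(f"{size}B params: ~{weights_gb(size):.0f} GB of weights, "
              f"at least {gpus_needed(size)} GPU(s)")
    print(f"Instance memory: {HBM_PER_GPU_GB * GPUS_PER_INSTANCE} GB")
```

Under these assumptions, a 70-billion-parameter model needs about 140 GB and fits on one 192 GB GPU, while a 400-billion-parameter model needs about 800 GB, which exceeds a single GPU but fits within the roughly 1,536 GB available across eight.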

Challenging Nvidia’s dominance will not be an easy task. The Santa Clara, California-based company has fiercely protected its territory. Nvidia has already taken legal action against projects that seek to provide a CUDA compatibility layer for third-party GPUs like AMD’s, arguing that they violate CUDA’s terms of service. This has limited the development of open source solutions and made it more difficult for developers to embrace alternatives.

AMD’s strategy to circumvent Nvidia’s blockade is to leverage the open source ROCm framework, which competes directly with CUDA. The company has made significant progress in this regard by partnering with Hugging Face, the world’s largest open source AI model repository, to support running code on AMD hardware.

AMD has already seen promising results from this partnership, offering native support and additional acceleration tools such as ONNX model execution on ROCm-powered GPUs, Optimum-Benchmark, DeepSpeed for ROCm-powered GPUs using Transformers, GPTQ, TGI, and more.

Ferreira also pointed out that these integrations are native, eliminating the need for third-party solutions or intermediaries that can make the process less efficient.

“You can run your existing notebooks and existing scripts on AMD, which is important, because many other accelerators require transcoding and all kinds of pre-compiling scripts,” he said. “Our product works out of the box and is really fast.”

AMD’s move is undoubtedly bold, but displacing Nvidia will be quite a challenge. Rather than rest on its laurels, Nvidia continues to innovate and make it difficult for developers to migrate from the de facto CUDA standard to new infrastructure.

However, AMD’s open source approach, strategic partnerships, and focus on native compatibility position it as a viable alternative for developers looking for more options in the AI hardware market.

Edited by Ryan Ozawa.
