After seemingly keeping a low profile for most of the past year, Apple is starting to make its mark in artificial intelligence, especially open source AI.

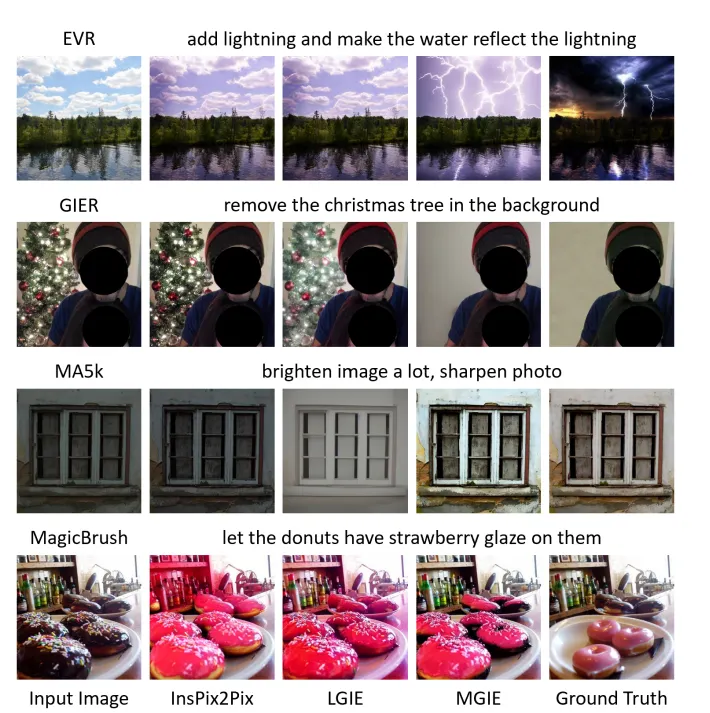

The Cupertino-based tech giant partnered with the University of California, Santa Barbara to develop an AI model that can edit images based on natural language, in the same way people interact with ChatGPT. Apple calls this Multimodal Large Language Model-Guided Image Editing (MGIE).

MGIE interprets the text instructions provided by the user, then processes and refines them into precise image editing commands. A diffusion model strengthens the process by letting MGIE apply edits based on the characteristics of the original image.

A multimodal large language model (MLLM), which can handle both text and images, forms the basis of the MGIE method. Unlike traditional single-mode AI, which focuses only on text or only on images, an MLLM can handle complex commands and operate in a wider range of situations. For example, a model can understand a text instruction, analyze the elements of a particular photo, and then remove one of them to create a new photo without that element.

To accomplish these tasks, an AI system must combine a variety of capabilities, including text generation, image generation, segmentation, and CLIP analysis, all in the same process.

The introduction of MGIE brings Apple closer to offering functionality similar to OpenAI’s ChatGPT Plus, which lets users interact conversationally with AI models to create customized images from text input. With MGIE, users provide detailed instructions in natural language (“remove traffic cones from the foreground”), which are translated into image editing commands and executed.

For example, users can start with a photo of a blonde person and turn them into a redhead by saying “Make this person a redhead.” Internally, the model understands the instruction, segments the person’s hair, generates a command such as “red hair, highly detailed, realistic, ginger tone,” and then executes the change through inpainting.
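The three steps in that example (expand the instruction, segment the target, inpaint) can be illustrated with a toy sketch. This is not MGIE's code or API: every name here (`EditPlan`, `expand_instruction`, `segment`, `inpaint`, `edit`) is hypothetical, the "image" is a tiny grid of labeled pixels, and a hard-coded lookup stands in for the language model and the diffusion model.

```python
from dataclasses import dataclass

# Toy "image": each pixel is a (region_label, color) pair. A real system
# works on actual pixels; the labels here stand in for what a segmentation
# model would have to infer.
Pixel = tuple[str, str]
Image = list[list[Pixel]]


@dataclass
class EditPlan:
    target_region: str      # which part of the image to change
    expressive_prompt: str  # the expanded, explicit editing command
    new_color: str          # toy stand-in for what the generator paints


def expand_instruction(instruction: str) -> EditPlan:
    """Stand-in for the MLLM step: turn a terse request into an explicit plan.

    Assumption: a hard-coded lookup replaces the language model.
    """
    rules = {
        "make this person a redhead": EditPlan(
            target_region="hair",
            expressive_prompt="red hair, highly detailed, realistic, ginger tone",
            new_color="ginger",
        ),
    }
    key = instruction.strip().lower().rstrip(".")
    if key not in rules:
        raise ValueError(f"this toy sketch cannot handle: {instruction!r}")
    return rules[key]


def segment(image: Image, region: str) -> list[list[bool]]:
    """Stand-in for segmentation: build a mask of pixels in the target region."""
    return [[label == region for label, _ in row] for row in image]


def inpaint(image: Image, mask: list[list[bool]], plan: EditPlan) -> Image:
    """Stand-in for inpainting: repaint masked pixels, leave the rest untouched."""
    return [
        [(label, plan.new_color) if masked else (label, color)
         for (label, color), masked in zip(row, mask_row)]
        for row, mask_row in zip(image, mask)
    ]


def edit(image: Image, instruction: str) -> Image:
    plan = expand_instruction(instruction)
    mask = segment(image, plan.target_region)
    return inpaint(image, mask, plan)


photo: Image = [
    [("hair", "blonde"), ("hair", "blonde")],
    [("face", "skin"), ("background", "blue")],
]
edited = edit(photo, "Make this person a redhead.")
```

Only the pixels inside the mask change, which is the point of inpainting: the face and background of the toy photo are returned untouched, and the original `photo` is not modified.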

Apple’s approach is consistent with existing tools such as Stable Diffusion, which can be extended with a basic interface for text-based image editing. Third-party tools like Pix2Pix let users give Stable Diffusion natural language commands and see the effects on their edited images in real time.

However, Apple’s approach has proven to be more accurate than other similar methods.

In addition to generative AI, Apple MGIE can perform other traditional image editing tasks such as color grading, resizing, rotating, re-styling, and sketching.

Why did Apple make it open source?

Apple’s embrace of open source is clearly a strategic move that goes beyond simple licensing requirements.

To build MGIE, Apple used open source models such as LLaVA and Vicuna. The licensing requirements of these models, which limit commercial use by large companies, would have forced Apple to share its improvements publicly on GitHub.

But open sourcing also allows Apple to tap into the global pool of developers to make MGIE stronger and more flexible. This kind of collaboration moves things along much faster than if Apple had to do everything on its own and start from scratch. The openness also helps MGIE advance faster by inspiring a broader spectrum of ideas and attracting diverse technical talent.

Apple’s involvement in the open source community through projects like MGIE also helps increase brand awareness among developers and tech enthusiasts. These benefits are no secret: both Meta and Microsoft are investing heavily in open source AI.

Releasing MGIE as open source software allows Apple to take the lead in setting still-evolving industry standards, especially for AI and AI-based image editing. With MGIE, Apple has given AI artists and developers a solid foundation on which to build the next generation of products, with greater accuracy and efficiency than is available elsewhere.

MGIE will definitely make Apple products better. It would not be difficult to transcribe a voice command sent to Siri and use that text to edit a photo on the user’s smartphone, computer, or headset.

Technically proficient AI developers can start using MGIE today. Visit the project’s GitHub repository.

Edited by Ryan Ozawa.