To stem the spread of child sexual abuse material (CSAM), a coalition of leading generative AI developers, including Google, Meta, and OpenAI, has pledged to enforce safeguards around the emerging technology.

The group was brought together by two non-profit organizations: children’s technology group Thorn and New York-based All Tech is Human. Thorn, formerly known as the DNA Foundation, was founded in 2012 by actors Demi Moore and Ashton Kutcher.

The joint pledge was released Tuesday alongside a new Thorn report advocating “safe by design” principles in generative AI development that would prevent the creation of CSAM throughout the entire life cycle of an AI model.

“We urge all companies that develop, deploy, maintain, and use generative AI technologies and products to adopt these design-driven principles and demonstrate a commitment to preventing the creation and spread of CSAM, AIG-CSAM, and other acts of child sexual abuse and exploitation,” Thorn said in a statement.

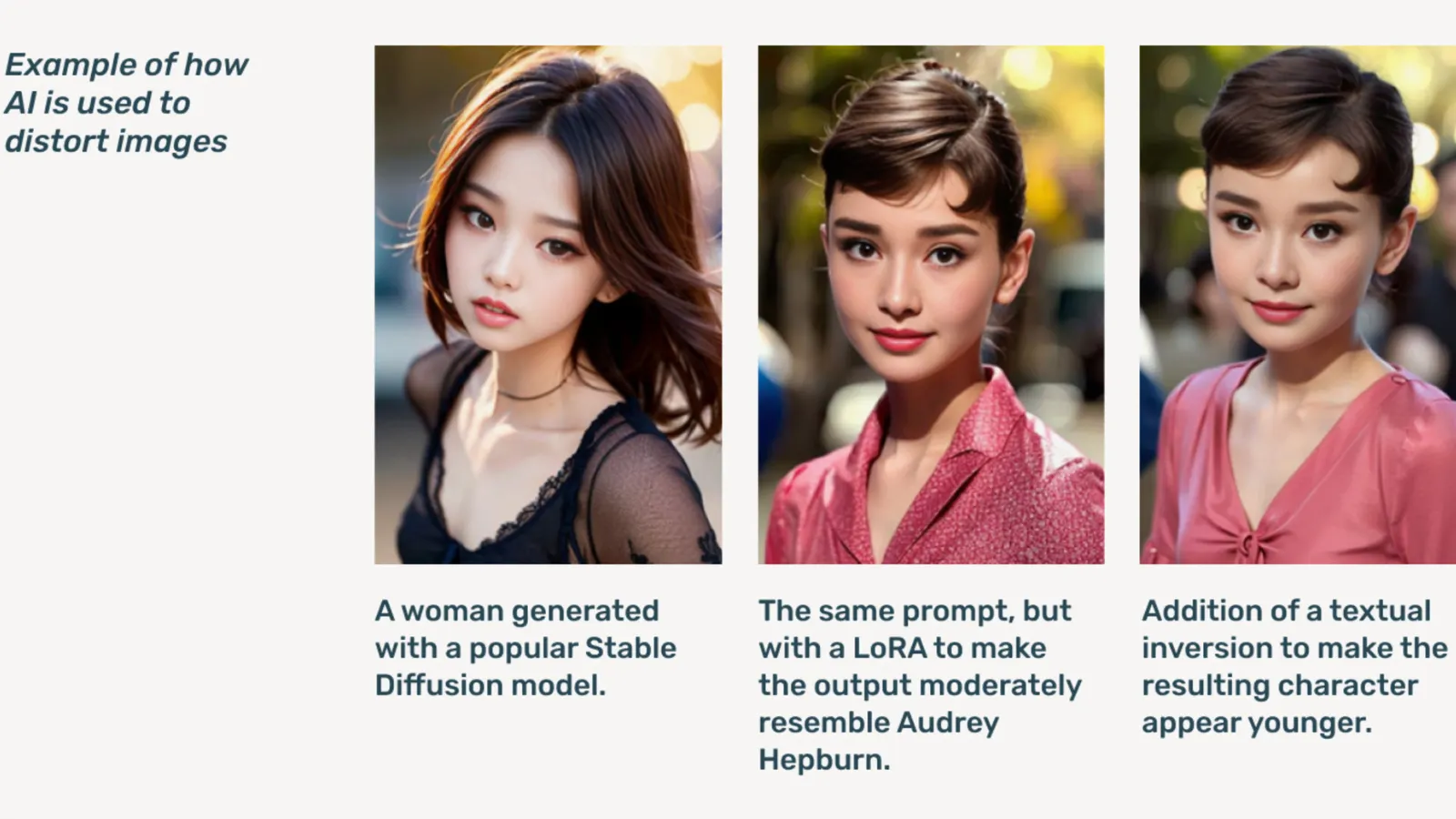

AIG-CSAM is AI-generated CSAM, which, according to the report, can be relatively easy to create.

Thorn develops tools and resources focused on protecting children from sexual abuse and exploitation. In its 2022 impact report, the organization said more than 824,466 files containing child abuse material had been found. Last year, Thorn said more than 104 million files of suspected CSAM were reported in the United States alone.

Deepfake child pornography, already a problem online, has proliferated as generative AI models have become publicly available and as standalone AI models that do not require cloud services have circulated on dark web forums.

Thorn said generative AI makes it easier than ever to create large volumes of content. A single child sex offender can potentially create large amounts of CSAM, including by adapting original images and videos into new content.

“The influx of AIG-CSAM poses a significant risk to an already strained child safety ecosystem, further increasing the challenges law enforcement faces in identifying and rescuing existing victims of abuse, and scaling new victimization of more children,” says Thorn.

Thorn’s report outlines a set of principles generative AI developers should follow to prevent their technology from being used to produce child pornography, including responsibly sourcing training datasets, incorporating feedback loops and stress-testing strategies, using content history or “provenance” with adversarial misuse in mind, and responsibly hosting their AI models.

Other companies that have signed the pledge include Microsoft, Anthropic, Mistral AI, Amazon, Stability AI, Civit AI and Metaphysic, each issuing separate statements today.

“Part of Metaphysic’s ethos is responsible development in the world of AI. It’s about empowerment, but it’s also about responsibility,” Alejandro Lopez, Chief Marketing Officer at Metaphysic, told Decrypt. “We are quick to recognize that getting started and moving forward means protecting literally the most vulnerable group in our society, children, and unfortunately, the darkest end of this technology, which is being used for child sexual abuse material in the form of deepfake pornography, that has happened.”

Launched in 2021, Metaphysic rose to prominence last year after it was revealed that several Hollywood stars, including Tom Hanks, Octavia Spencer and Anne Hathaway, were using Metaphysic Pro technology to digitize characteristics of their likenesses in a bid to retain ownership of the traits needed to train an AI model.

OpenAI declined to comment further on the initiative, instead providing Decrypt with a public statement from Child Safety Director Chelsea Carlson.

“We care deeply about the safety and responsible use of our tools, which is why we’ve built strong guardrails and safety measures into ChatGPT and DALL-E,” Carlson said in a statement. “We are committed to working with Thorn, All Tech is Human and the broader tech community to maintain safety-centered design principles and continue our work to mitigate potential harm to children.”

Decrypt reached out to other members of the coalition but did not immediately hear back.

“At Meta, we’ve been working to keep people safe online for over a decade. Over that time, we have developed numerous tools and capabilities to help prevent and combat potential harm, and we have also continued to adapt as predators attempt to evade our protections,” Meta said in a prepared statement.

“Across our products, we combine hash matching technology, artificial intelligence classifiers, and human review to proactively detect and remove CSAE material,” Susan Jasper, vice president of trust and safety solutions at Google, said in a post. “Our policies and protections are designed to detect all types of CSAE, including AI-generated CSAM,” she said. “When we identify malicious content, we take appropriate action, including removing it and reporting it to NCMEC.”

Last October, British watchdog the Internet Watch Foundation warned that AI-generated child abuse material could “overwhelm” the internet.

Edited by Ryan Ozawa.