Are you ready to blur the lines between reality and AI-created art?

If you follow the generative AI space, especially image generation, you are probably familiar with Stable Diffusion. This open-source AI platform has sparked a creative revolution, empowering artists and enthusiasts alike to explore the realm of human creativity for free, from their own computers.

With simple prompts you can get picturesque landscapes, fantasy illustrations, 3D creatures or cartoons. But the feature that really makes these tools stand out is their ability to produce incredibly realistic images.

But doing so requires some finesse and an attention to detail that general-purpose models sometimes lack. Some avid users can quickly tell when an image was created with Midjourney or DALL-E just by looking at it. When it comes to creating images that trick the human brain, though, Stable Diffusion’s versatility is unrivaled.

From meticulous handling of color and composition to their incredible ability to convey human emotions and expressions, some custom models are redefining what is possible in the world of generative AI. Here are some specialized models we think are the best for creating hyperrealistic images with Stable Diffusion.

We used the same prompts for all models and did not use LoRAs (Low-Rank Adaptation add-ons) to allow for a fairer comparison. Our results were based on prompts and text embeddings alone. We also made incremental changes to the settings to test small variations in our generations.

Prompts

Our positive prompt is: Professional photography, close-up portrait photo of caucasian man wearing black sweater, serious face, dramatic lighting, nature, gloomy and cloudy weather, bokeh

Our negative prompt (instructing Stable Diffusion on what not to generate) is: embedding:BadDream, embedding:UnrealisticDream, embedding:FastNegativeV2, embedding:JuggernautNegative-neg, (deformed iris, deformed pupils, semi-realistic, cgi, 3d, render, sketch, cartoon, drawing, anime:1.4), text, cropped, out of frame, worst quality, low quality, jpeg artifacts, ugly, duplicate, morbid, mutilated, extra fingers, mutated hands, poorly drawn hands, poorly drawn face, mutation, deformed, blurry, dehydrated, bad anatomy, bad proportions, extra limbs, cloned face, disfigured, gross proportions, malformed limbs, missing arms, missing legs, extra arms, extra legs, fused fingers, too many fingers, long neck, embedding:negative_hand-neg.
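The negative prompt mixes three kinds of tokens: references to text embeddings, a parenthesized group carrying a weight (`:1.4`), and plain comma-separated terms. As a rough sketch, assuming ComfyUI's `embedding:Name` convention for referencing embeddings, the Python helper below separates the three so each can be inspected or reused. The function name and the shortened sample string are ours, for illustration only.

```python
import re

# Matches a weighted group such as "(cgi, 3d, render:1.4)".
GROUP_RE = re.compile(r"\(([^()]*):([0-9.]+)\)")


def split_negative_prompt(prompt, prefix="embedding:"):
    """Return (embedding_names, weighted_groups, plain_terms).

    `prefix` is an assumption: ComfyUI-style prompts reference text
    embeddings as "embedding:Name".
    """
    # Extract weighted groups first so their inner commas are not split.
    weighted = []
    for body, weight in GROUP_RE.findall(prompt):
        terms = [t.strip() for t in body.split(",") if t.strip()]
        weighted.append((terms, float(weight)))

    embeddings, plain = [], []
    for token in GROUP_RE.sub("", prompt).split(","):
        token = token.strip()
        if not token:
            continue
        if token.startswith(prefix):
            embeddings.append(token[len(prefix):])
        else:
            plain.append(token)
    return embeddings, weighted, plain


sample = "embedding:BadDream, (cgi, 3d, render:1.4), text, low quality"
names, groups, terms = split_negative_prompt(sample)
print(names)   # embedding names found in the prompt
print(groups)  # weighted groups with their weight
print(terms)   # everything else
```

Seeing the embeddings listed separately makes it easier to confirm that each one is actually installed before you generate.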

All resources used are listed at the end of this document.

Stable Diffusion 1.5: The AI veteran aging gracefully

Stable Diffusion 1.5 is like an old American muscle car that beats fancier, newer cars in a drag race. Developers have tinkered with SD1.5 for so long that it buried Stable Diffusion 2.1 in the ground. In fact, even today, many users prefer this version over SDXL, which is two generations newer.

These models will be your new best friends when it comes to creating images that are virtually indistinguishable from real photographs.

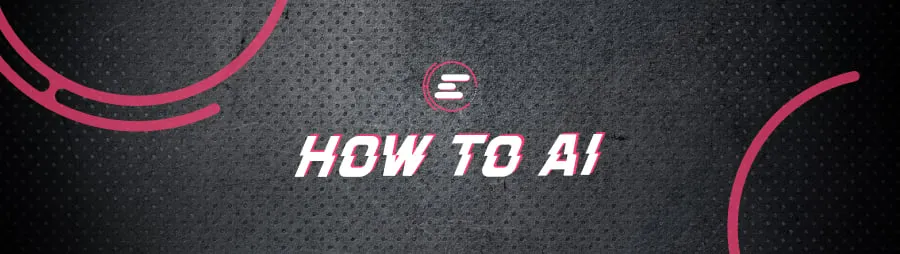

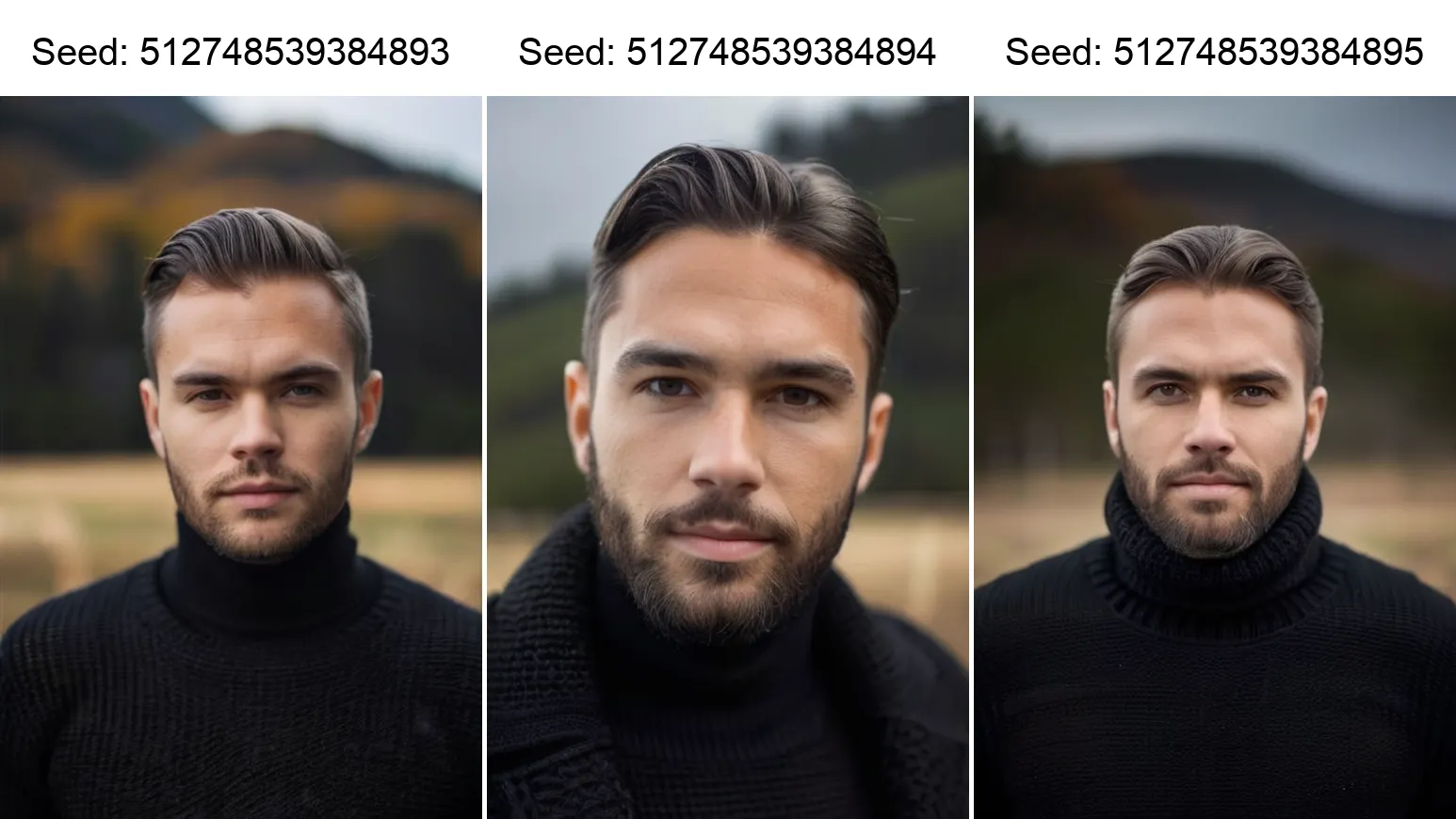

1. Juggernaut Rborn

Juggernaut Rborn is a fan favorite with its realistic color scheme and impressive subject-to-background distinction. This model is especially good at producing high-quality skin detail, hair, and bokeh effects in portraits.

The latest version has been fine-tuned to deliver even better results. Juggernaut has always offered more realistic color schemes than the saturated and unnatural colors of other Stable Diffusion models. Its generations tend to be warmer and more washed out, much like unedited RAW photos.

Some adjustments are still required to get the best results. We use the DPM++ 2M Karras sampler at about 35 steps, with the CFG scale set to a moderate 7.
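For readers who drive A1111 from a script rather than the browser, these settings can be written down as a request body. The sketch below only builds the JSON and does not send it. The field names follow the A1111 web UI's `/sdapi/v1/txt2img` endpoint as we understand it, so check the `/docs` page of your own install. The class name and the 512×768 portrait size are our assumptions; the text does not state a resolution for this model.

```python
import json
from dataclasses import asdict, dataclass


@dataclass
class Txt2ImgSettings:
    prompt: str
    negative_prompt: str = ""
    sampler_name: str = "DPM++ 2M Karras"  # sampler quoted in the text
    steps: int = 35                        # "about 35 steps"
    cfg_scale: float = 7.0                 # CFG scale quoted in the text
    width: int = 512                       # assumption, not from the text
    height: int = 768                      # assumption, not from the text
    seed: int = -1                         # -1 requests a random seed


def to_payload(settings):
    """Serialize the settings as a JSON request body."""
    return json.dumps(asdict(settings), indent=2)


settings = Txt2ImgSettings(
    prompt="Professional photography, close-up portrait photo, bokeh",
    negative_prompt="worst quality, low quality",
)
print(to_payload(settings))
```

With the web UI started with its `--api` flag, a body like this can be POSTed to the endpoint. Newer releases may expect the scheduler ("Karras") in a separate field, so treat the sampler string as something to verify.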

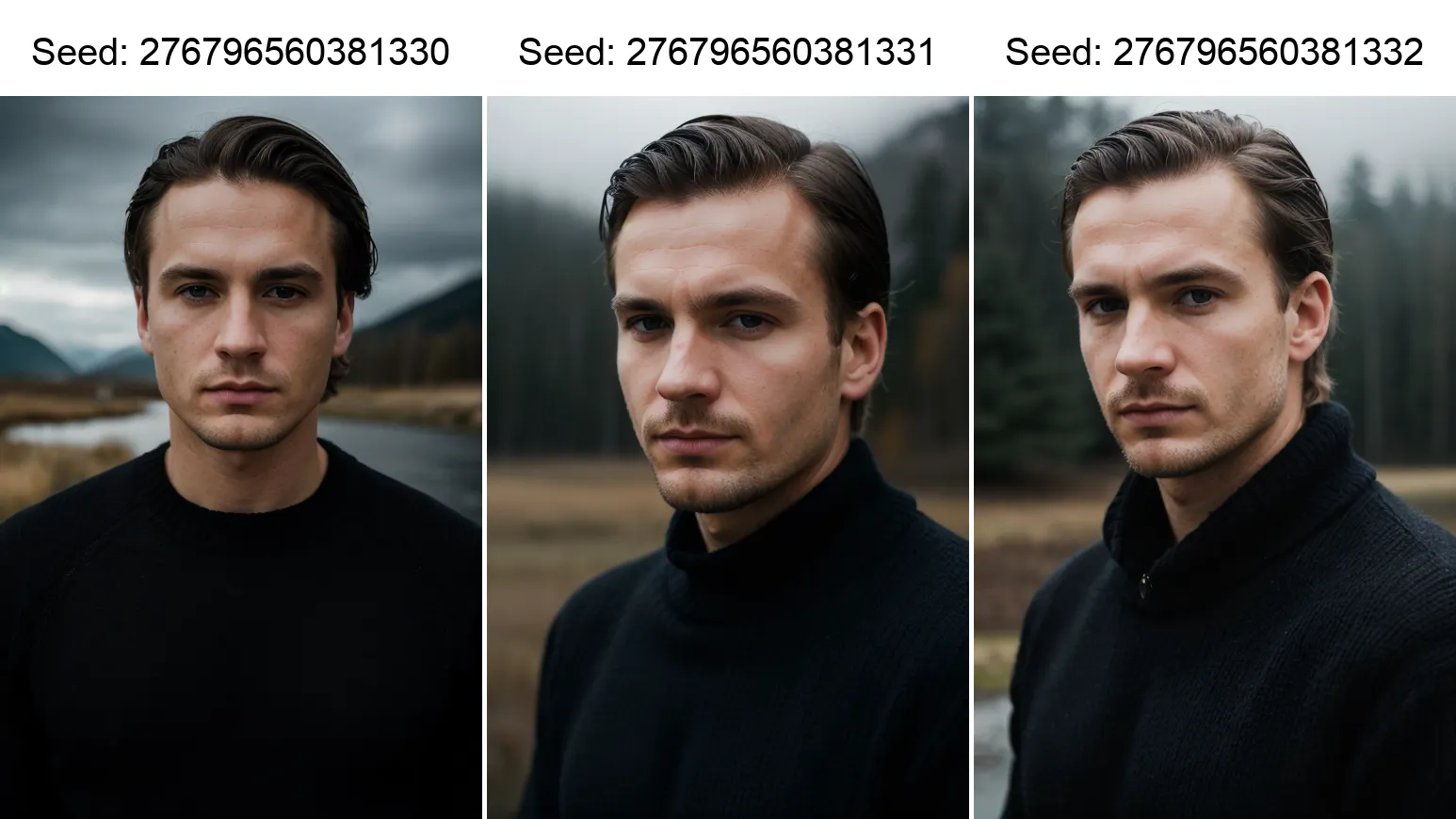

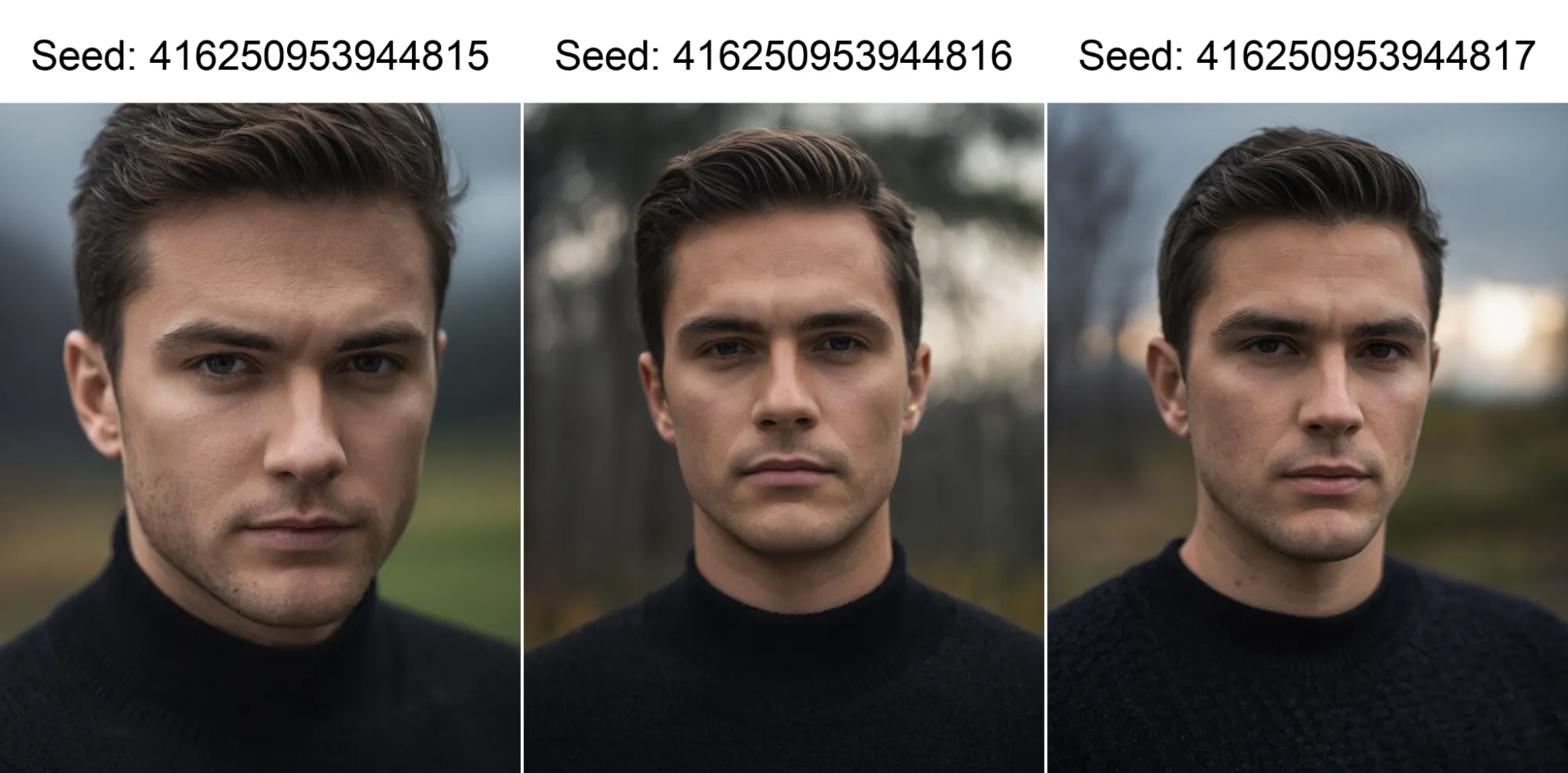

2. Realistic Vision v5.1

A true pioneer in photorealistic image creation, Realistic Vision v5.1 marks a pivotal moment in the evolution of Stable Diffusion, allowing it to compete in realism with Midjourney and all other models. Version 5.1 excels at capturing facial expressions and blemishes, making it the best choice for portrait enthusiasts. It also handles emotions well and focuses more on the subject than the background, which keeps the end result realistic. This model is a popular choice thanks to its impressive performance and versatility.

There is a newer version (v6.0), but I prefer v5.1 because it still handles the small details that matter for realistic images better. Things like skin, hair and nails tend to look more convincing in v5.1. Other than that, the results are similar and the improvements seem to be incremental.

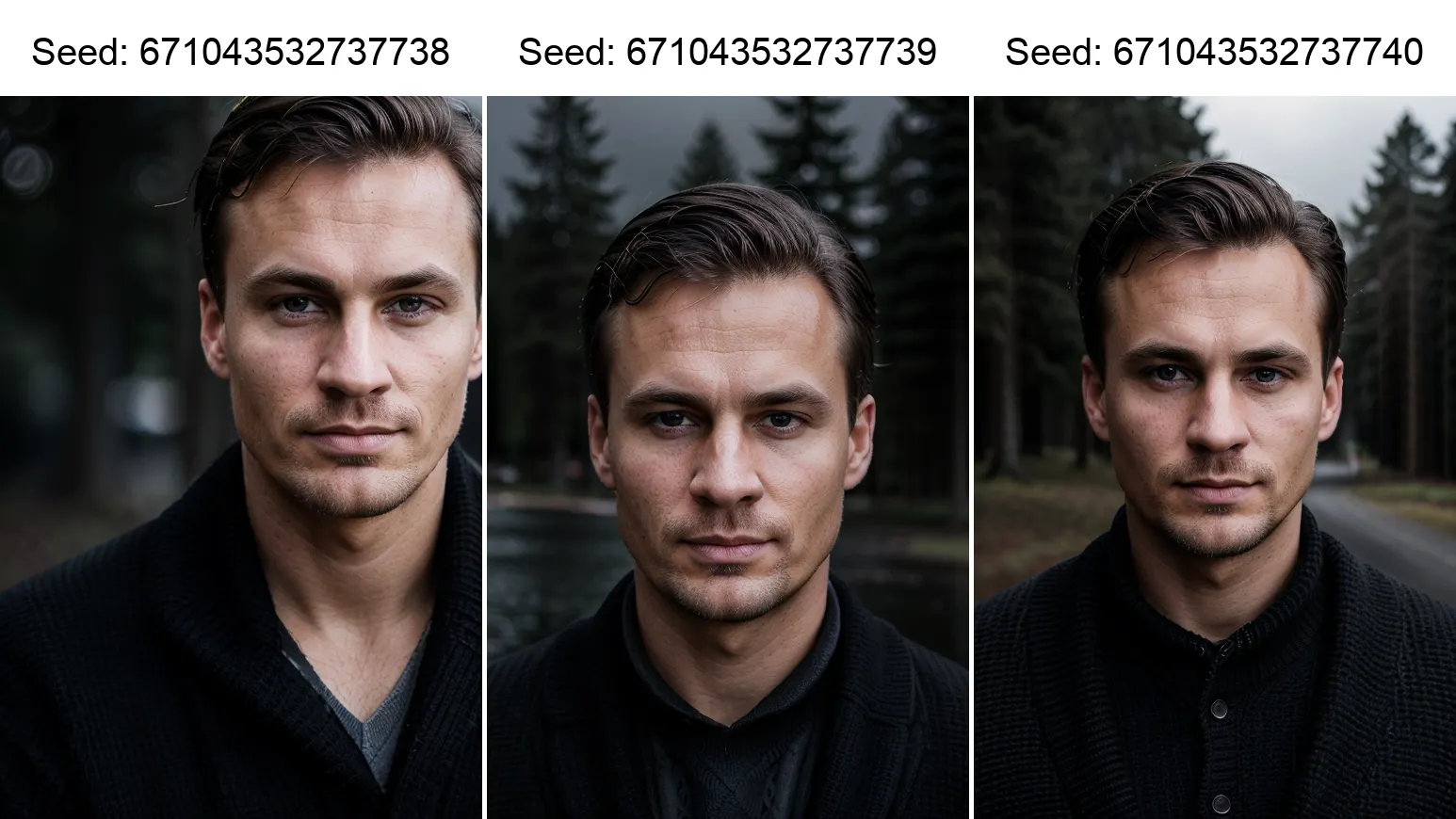

3. I Can’t Believe It’s Not Photography

With its versatility and impressive lighting effects, the cheekily named I Can’t Believe It’s Not Photography model is a great all-round option for creating hyperrealistic images. It’s very creative, handles different angles well, and can be used on a variety of subjects, not just people.

This model performs particularly well at 640×960, which is higher than SD1.5’s native 512×512, but it can also deliver excellent results at 768×1152, a size closer to what SDXL models work with.

For optimal results, use the DPM++ 3M SDE Karras or DPM++ 2M Karras sampler, 20 to 30 steps, and a CFG scale of 2.5 to 5 (lower than usual).
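To put those resolutions in perspective, here is a small sketch that compares each one against SD1.5's 512×512 baseline. It relies on one assumption worth stating: Stable Diffusion works on a latent image downsampled by a factor of 8, so widths and heights are normally kept to multiples of 8. The helper name is ours.

```python
from fractions import Fraction


def describe(width, height, latent_factor=8):
    """Summarize a resolution: pixel count, aspect ratio, latent size."""
    return {
        "pixels": width * height,
        "aspect": Fraction(width, height),  # reduced automatically
        "latent": (width // latent_factor, height // latent_factor),
        "valid": width % latent_factor == 0 and height % latent_factor == 0,
    }


base = describe(512, 512)
for w, h in [(640, 960), (768, 1152)]:
    info = describe(w, h)
    ratio = info["pixels"] / base["pixels"]
    print(f"{w}x{h}: aspect {info['aspect']}, "
          f"latent {info['latent']}, {ratio:.2f}x the pixels of 512x512")
```

Both sizes share the same 2:3 portrait aspect ratio, so moving from one to the other changes detail and memory use rather than framing.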

Honorable mentions:

Photon V1: This versatile model excels at producing realistic results for a variety of subjects, including people.

Realistic Stock Photo: If you want the polished, flawless look of a stock photo, this model is an excellent choice. It creates convincing and accurate images without skin imperfections.

aZovya Photoreal: Although not as well known, this model produces impressive results and can improve the performance of other models when merged into their training recipes.

Stable Diffusion XL: Versatile Visionaries

Stable Diffusion 1.5 may be the favorite for realistic images, but Stable Diffusion XL offers more versatility and higher-quality results without resorting to tricks like upscaling. It requires a bit more power, but can run on a GPU with 6GB of VRAM, 2GB more than SD1.5 requires.

Here are the models leading the way.

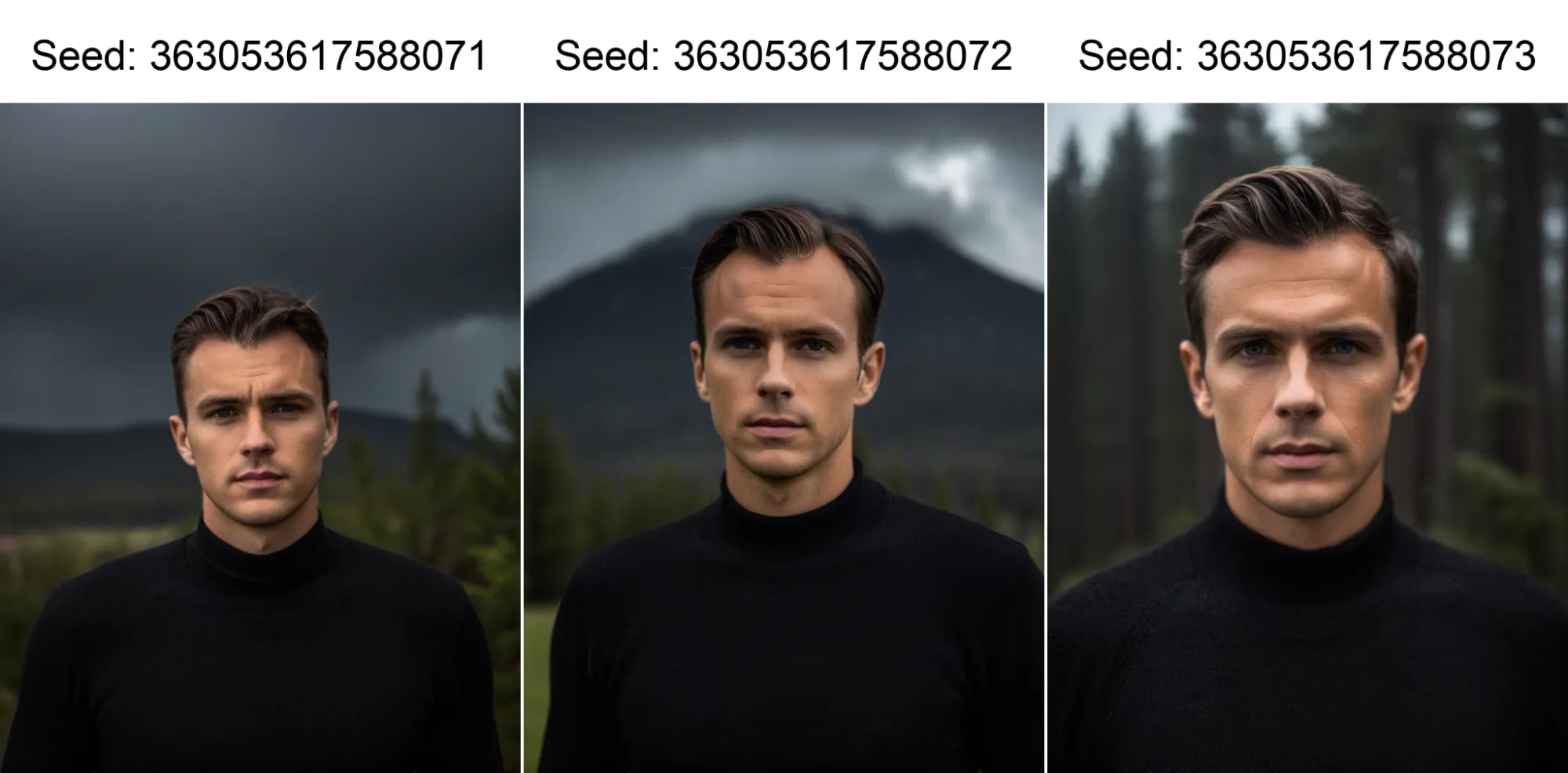

1. Juggernaut XL (Version x)

Building on the success of its predecessor, Juggernaut XL gives Stable Diffusion XL a cinematic look and impressive subject focus. This model offers the same characteristic color composition, free from oversaturation, along with good body proportions and the ability to understand long prompts. It focuses more on the subject and defines facial features very well, as current SDXL models generally do.

For best results, use a resolution of 832×1216 (for portraits), the DPM++ 2M Karras sampler, 30 to 40 steps, and a low CFG scale of 3 to 7.
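Because the recommendation is a range rather than a single value, it can help to test several combinations while holding everything else constant. The sketch below enumerates a small grid over the step and CFG ranges quoted here. The function name, the sampled values within each range, and the fixed seed are our assumptions; the dictionary keys mirror common txt2img setting names.

```python
from itertools import product


def sweep(steps_values, cfg_values, seed=12345):
    """Build one settings dict per (steps, cfg) combination.

    The seed is fixed (arbitrary value) so that differences between
    images come from the swept settings, not from random noise.
    """
    return [
        {
            "width": 832,
            "height": 1216,
            "sampler_name": "DPM++ 2M Karras",
            "steps": steps,
            "cfg_scale": cfg,
            "seed": seed,
        }
        for steps, cfg in product(steps_values, cfg_values)
    ]


grid = sweep(steps_values=range(30, 41, 5), cfg_values=[3, 5, 7])
for job in grid:
    print(job["steps"], job["cfg_scale"])
```

Changing one variable at a time, with the prompt and seed untouched, makes it much easier to see what each setting contributes.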

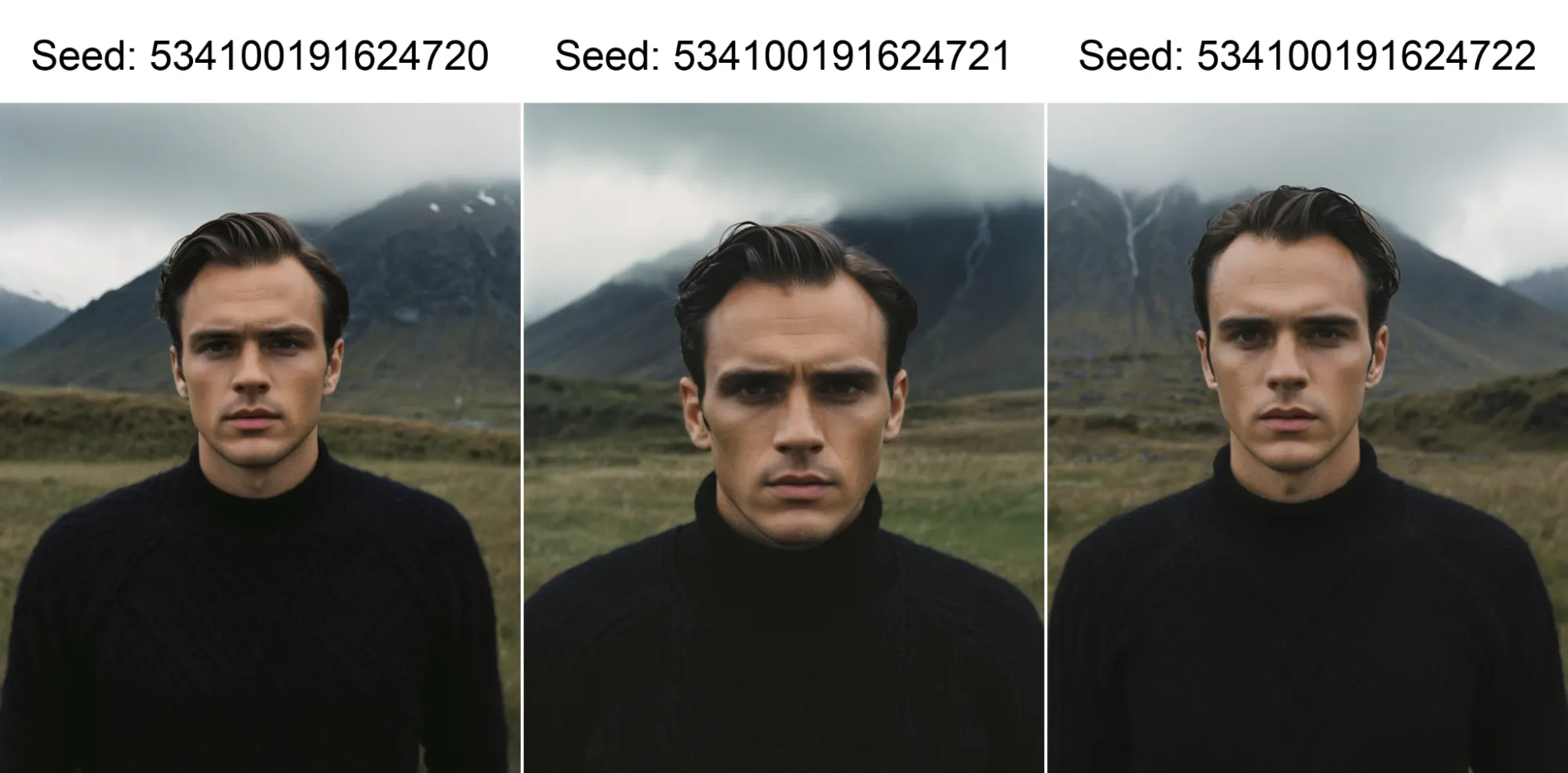

2. RealVisXL

Tailor-made with realism in mind, RealVisXL is the best choice for capturing the subtle flaws that make us human. It excels at rendering skin lines, moles, variations in tone, and jawlines, delivering a convincing final result. It is probably the best model for generating realistic humans.

For optimal results, use 15 to 30 or more sampling steps and the DPM++ 2M Karras sampling method.
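With several models each asking for different settings, a small lookup table can catch mistakes before a long generation run. The sketch below records only the ranges quoted in this guide and flags values outside them. The class and function names are ours, and an open-ended upper bound ("30 or more") is represented as `None`.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass(frozen=True)
class Preset:
    samplers: Tuple[str, ...]
    steps: Tuple[int, Optional[int]]          # (min, max); max None = open
    cfg: Optional[Tuple[float, float]] = None  # None = not stated in guide


PRESETS = {
    "I Can't Believe It's Not Photography": Preset(
        ("DPM++ 3M SDE Karras", "DPM++ 2M Karras"), (20, 30), (2.5, 5.0)),
    "Juggernaut XL": Preset(("DPM++ 2M Karras",), (30, 40), (3.0, 7.0)),
    "RealVisXL": Preset(("DPM++ 2M Karras",), (15, None)),
}


def check(model, sampler, steps, cfg=None):
    """Return a list of warnings; an empty list means nothing was flagged."""
    preset = PRESETS[model]
    warnings = []
    if sampler not in preset.samplers:
        warnings.append(f"sampler {sampler!r} is not among {preset.samplers}")
    low, high = preset.steps
    if steps < low or (high is not None and steps > high):
        warnings.append(f"steps={steps} is outside the suggested range")
    if cfg is not None and preset.cfg is not None:
        cfg_low, cfg_high = preset.cfg
        if not cfg_low <= cfg <= cfg_high:
            warnings.append(f"cfg={cfg} is outside {cfg_low}-{cfg_high}")
    return warnings


print(check("Juggernaut XL", "DPM++ 2M Karras", steps=35, cfg=5))
print(check("Juggernaut XL", "Euler a", steps=20, cfg=9))
```

These ranges are starting points rather than hard limits, so a warning here is a prompt to double-check, not proof that a setting is wrong.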

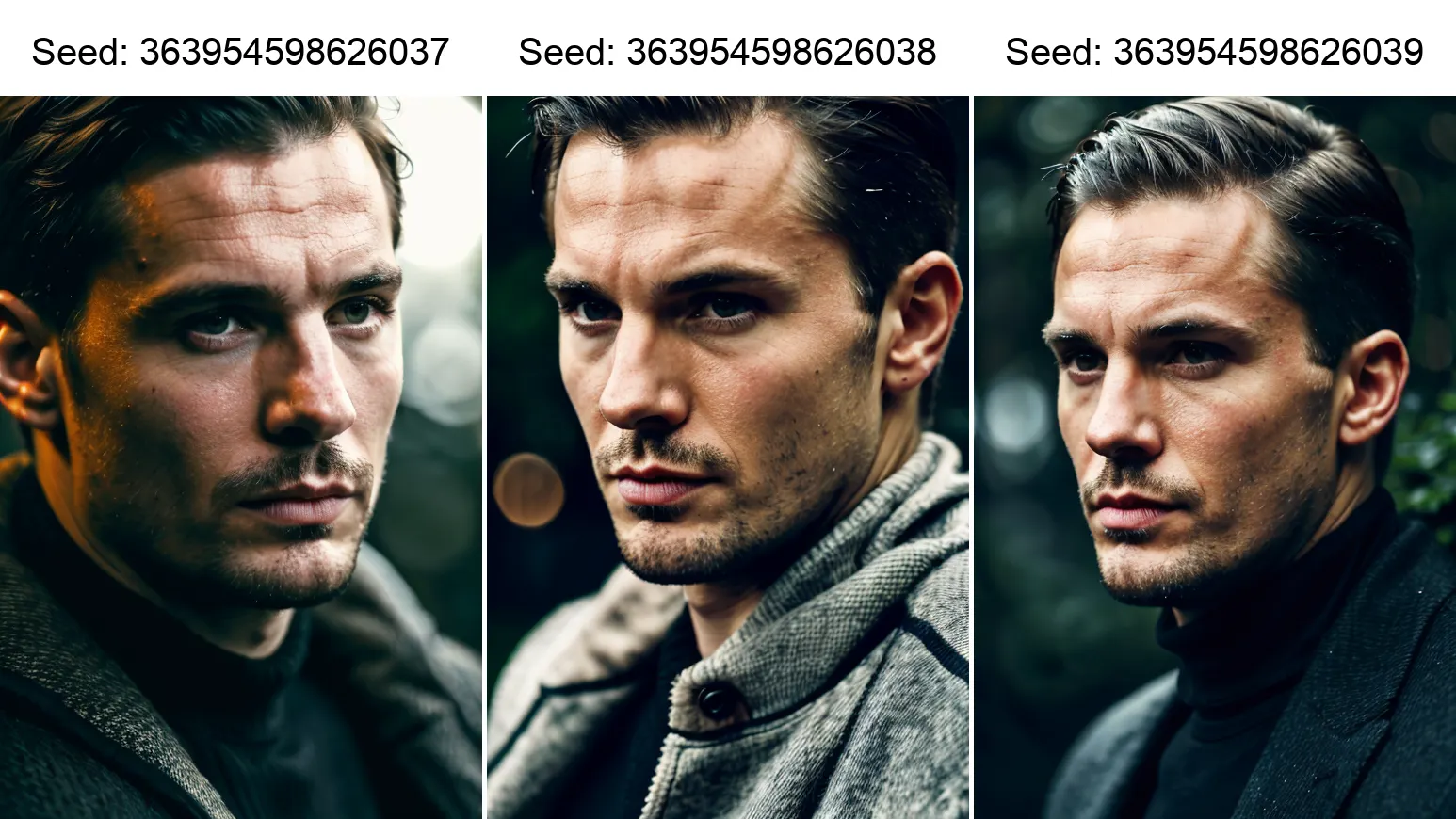

3. HelloWorld XL v6.0

A general-purpose model, HelloWorld XL v6.0 offers a unique approach to image creation thanks to its use of GPT4v tagging. It may take some getting used to, but the results are worth the effort.

This model is particularly good at conveying the analog aesthetic that is often missing from AI-generated images. It also handles body proportions, imperfections, and lighting well. However, it is different at its core from other SDXL models, so you may need to adjust your prompts and tags to get the best results.

For comparison, here is a similar generation using GPT4v-style tagging, with this positive prompt: Film aesthetic, professional photography, close-up portrait photo of a white man wearing a black sweater, serious face, in nature, gloomy and cloudy weather, wearing a black fleece sweater, deep atmospheric, cinematic quality, influence of analog photography.

Honorable mentions for SDXL include PhotoPedia XL, Realism Engine SDXL, and the deprecated Fully Real XL.

Expert tips for hyperrealistic images

Whichever model you choose, here are some expert tips to help you achieve impressive, life-like results.

- Experiment with embeddings: To improve the aesthetics of your images, try using embeddings recommended by model authors or popular embeddings such as BadDream, UnrealisticDream, FastNegativeV2, and JuggernautNegative-neg. There are also embeddings available for specific features such as hands, eyes, and other details.

- Embrace the power of LoRAs: Although omitted here, these handy tools can help you add detail to your images, adjust lighting, and enhance skin texture. There are many LoRAs available, so don’t be afraid to experiment and find the one that works best for you.

- Use face detailing extensions: These tools help you achieve great results on faces and hands, making your images more convincing. The ADetailer extension is available in A1111, and the Face Detailer Pipe node is available in ComfyUI.

- Get creative with ControlNets: If you’re a perfectionist when it comes to hands, ControlNets can help you achieve perfect results. There are also ControlNets available for other features like the face or body, so don’t be afraid to experiment and find what works best for you.
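As an illustration of the LoRA tip, the A1111 web UI activates a LoRA from inside the prompt with a tag of the form `<lora:name:weight>`. The sketch below appends such tags to an existing prompt. The helper name and the LoRA names are hypothetical placeholders, not real files; substitute the file names of LoRAs you have installed.

```python
def with_loras(prompt, loras):
    """Append A1111-style <lora:name:weight> tags to a prompt.

    `loras` maps a LoRA file name (without extension) to its weight.
    """
    tags = [f"<lora:{name}:{weight:g}>" for name, weight in loras.items()]
    if not tags:
        return prompt
    return ", ".join([prompt.strip().rstrip(","), *tags])


# "add_detail" and "skin_texture" are hypothetical placeholder names.
print(with_loras(
    "Professional photography, close-up portrait photo, bokeh",
    {"add_detail": 0.6, "skin_texture": 0.4},
))
```

Starting with modest weights and raising them gradually is a reasonable way to see what a LoRA contributes without letting it overpower the base model.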

If you need help getting started, read our Stable Diffusion guide.

Resources referenced in this guide include:

SD1.5 model:

SDXL Model:

Embedding:

We hope you find this tour of the Stable Diffusion tool helpful as you explore AI-generated images and art. Happy creating!

Edited by Ryan Ozawa.
