It’s been a good week for open source AI.

On Wednesday, Meta announced an upgrade to Llama 3.2, a state-of-the-art large language model that sees more than just what it says.

What’s more interesting is that some versions can fit into smartphones without compromising quality. This means you can use private, local AI interactions, apps, and custom features without sending your data to a third-party server.

Llama 3.2, unveiled Wednesday during Meta Connect, comes in four versions, each offering different effects. Strong contenders, the 11B and 90B parametric models, use both text and image processing capabilities to build muscle.

You can handle complex tasks like analyzing charts, adding image captions, and finding objects in photos based on natural language descriptions.

Llama 3.2 was released the same week as the Allen Institute’s Molmo, which claims to be the best open-source multimodal vision LLM on synthetic benchmarks and tested on par with GPT-4o, Claude 3.5 Sonnet, and Reka Core.

Zuckerberg’s company also introduced two new flyweight champions: efficiency, speed, and a pair of 1B and 3B parameter models designed for limited but repetitive tasks that don’t require too much computation.

This little model is a multilingual text expert who is good at “tool calling”. This means better integration with programming tools. Despite its small size, like GPT4o and other powerful models, it boasts an impressive 128K token context window, making it ideal for on-device summarizing, following instructions, and rewriting tasks.

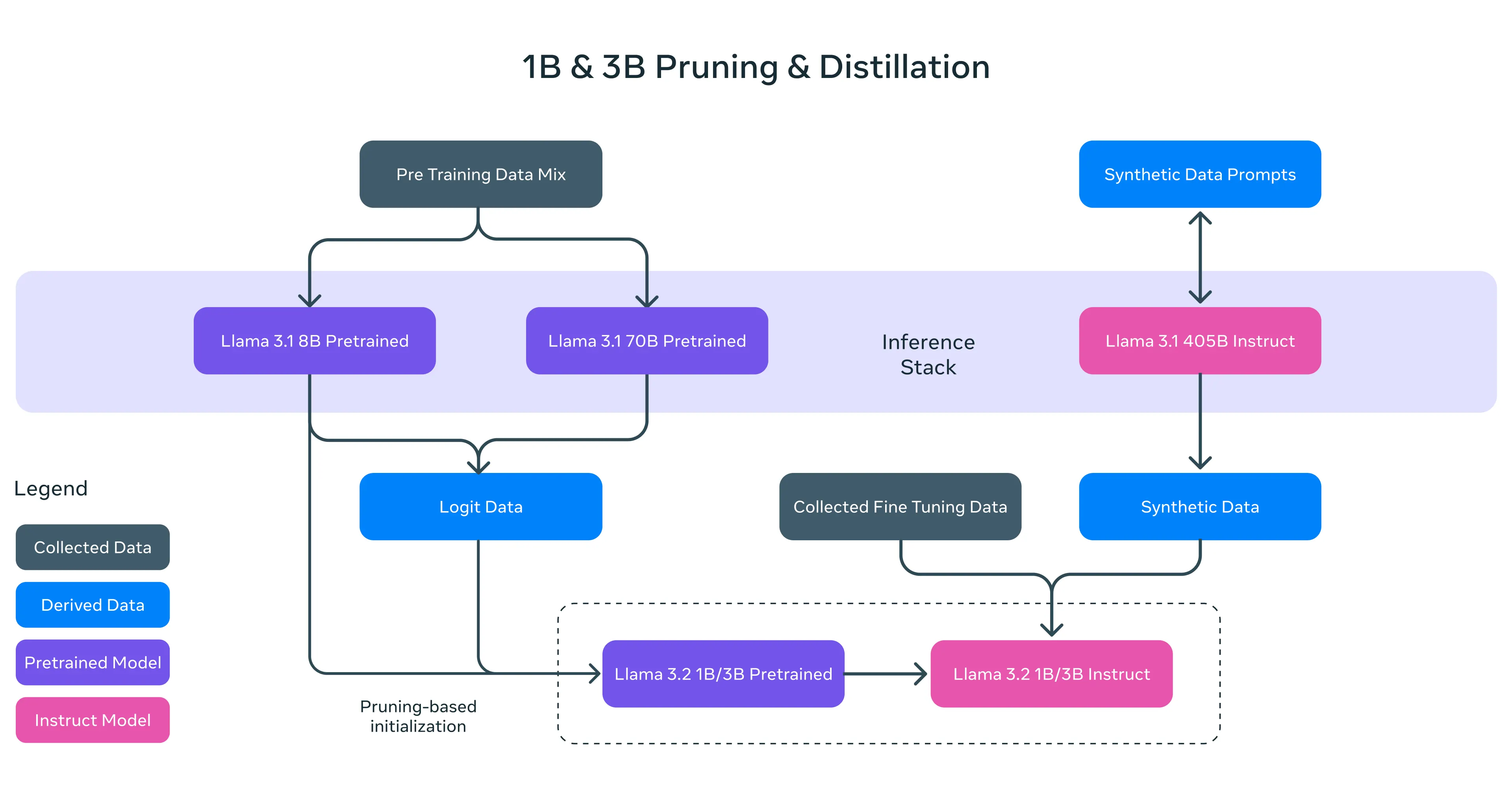

Meta’s engineering team performed some serious digital gymnastics to make this happen. First, they used structured pruning to prune unnecessary data from the larger model, then used knowledge distillation (transferring knowledge from the large model to a smaller model) to squeeze out additional smarts.

The result is a compact set of models that outperform their competitors across a variety of benchmarks in their weight class, including models including Google’s Gemma 2 2.6B and Microsoft’s Phi-2 2.7B.

Meta is also working hard to strengthen its on-device AI. They’ve partnered with hardware giants Qualcomm, MediaTek, and Arm to ensure Llama 3.2 works well with mobile chips from day one. Cloud computing giants are no exception. AWS, Google Cloud, Microsoft Azure, and many others are providing immediate access to new models on their platforms.

Under the hood, Llama 3.2’s vision capabilities come from clever architectural tweaks. Meta’s engineers created a bridge between pre-trained image encoders and text processing cores by applying adapter weights to existing language models.

This means that the model’s vision capabilities do not sacrifice text processing power, so users can expect similar or better text results when compared to Llama 3.1.

The Llama 3.2 release is open source, at least by Meta’s standards. Meta is making models available for download through Llama.com and Hugging Face, as well as its extensive partner ecosystem.

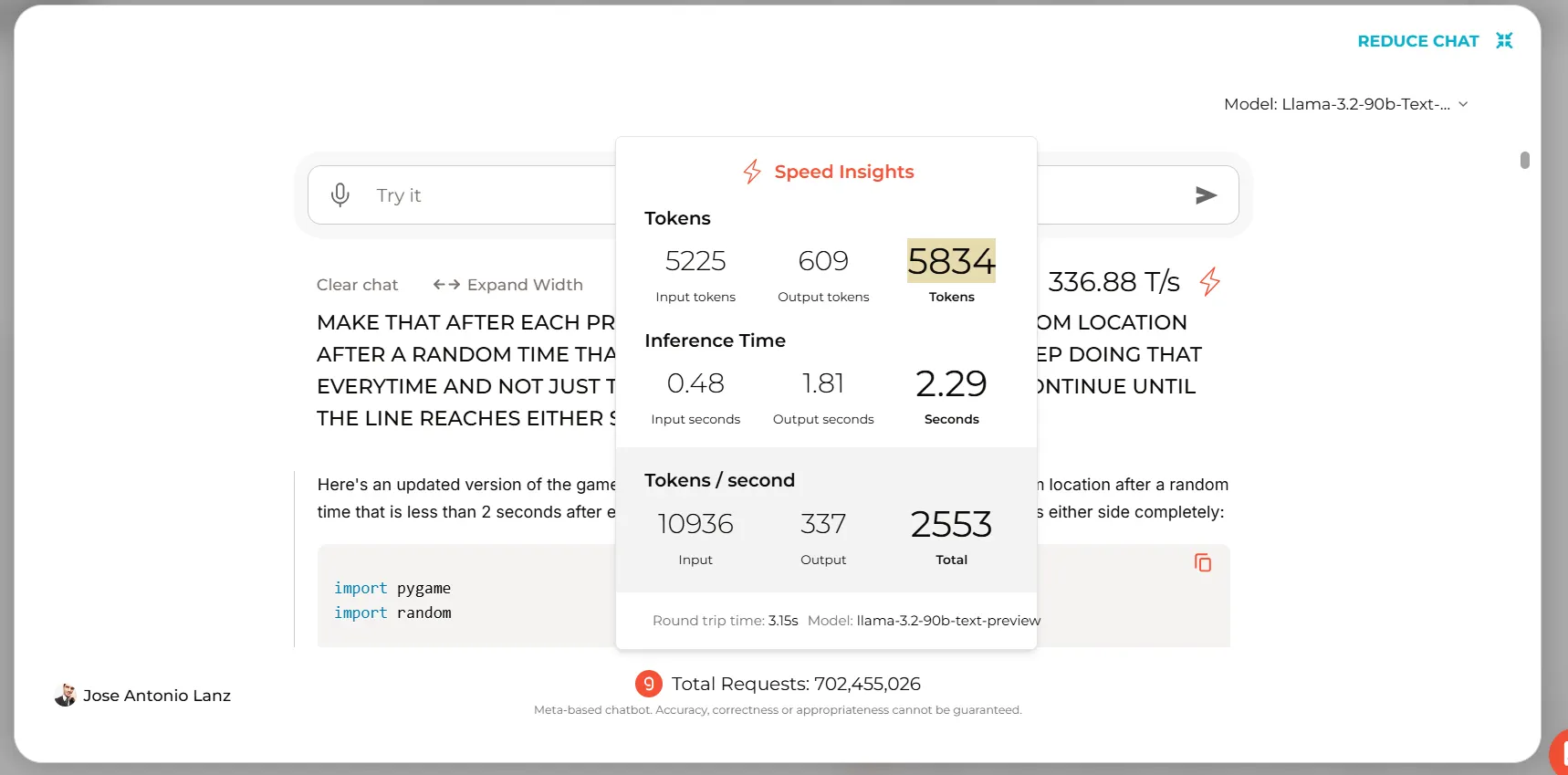

Those interested in running it in the cloud can use their own Google Collab Notebook or use Groq for text-based interactions to generate nearly 5000 tokens in under 3 seconds.

riding a llama

We’ve been trying out Llama 3.2, quickly testing its features across a variety of tasks.

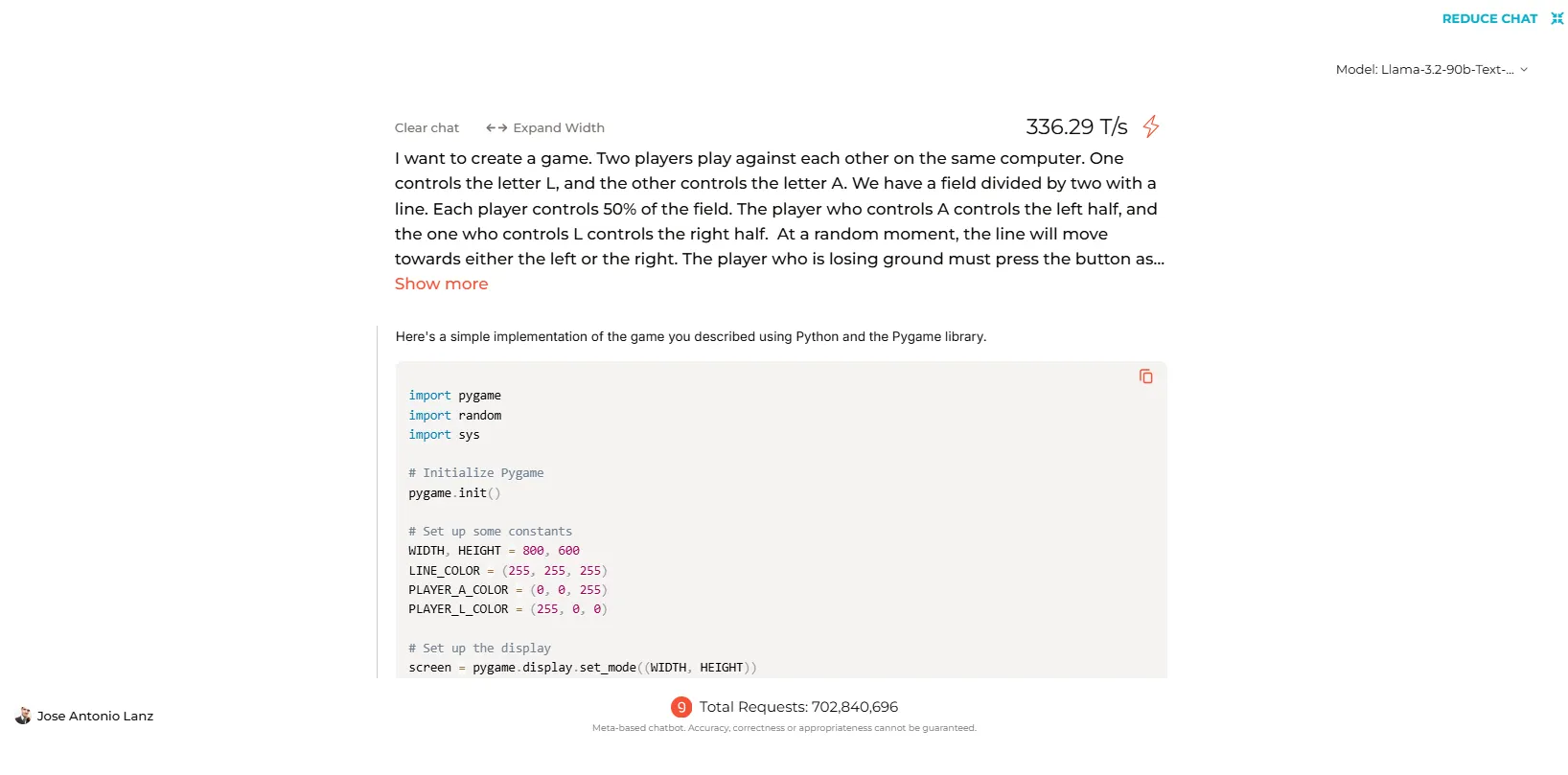

In text-based interactions, the model performs on par with previous models. However, coding skills have yielded mixed results.

When tested on the Groq platform, Llama 3.2 successfully generated code for popular games and simple programs. However, the smaller 70B model presented problems when we were asked to generate functional code for a custom game we had designed. However, the more powerful 90B was much more efficient and produced a functional game on the first try.

Click this link to see the full code generated for Llama-3.2 and all other models we tested.

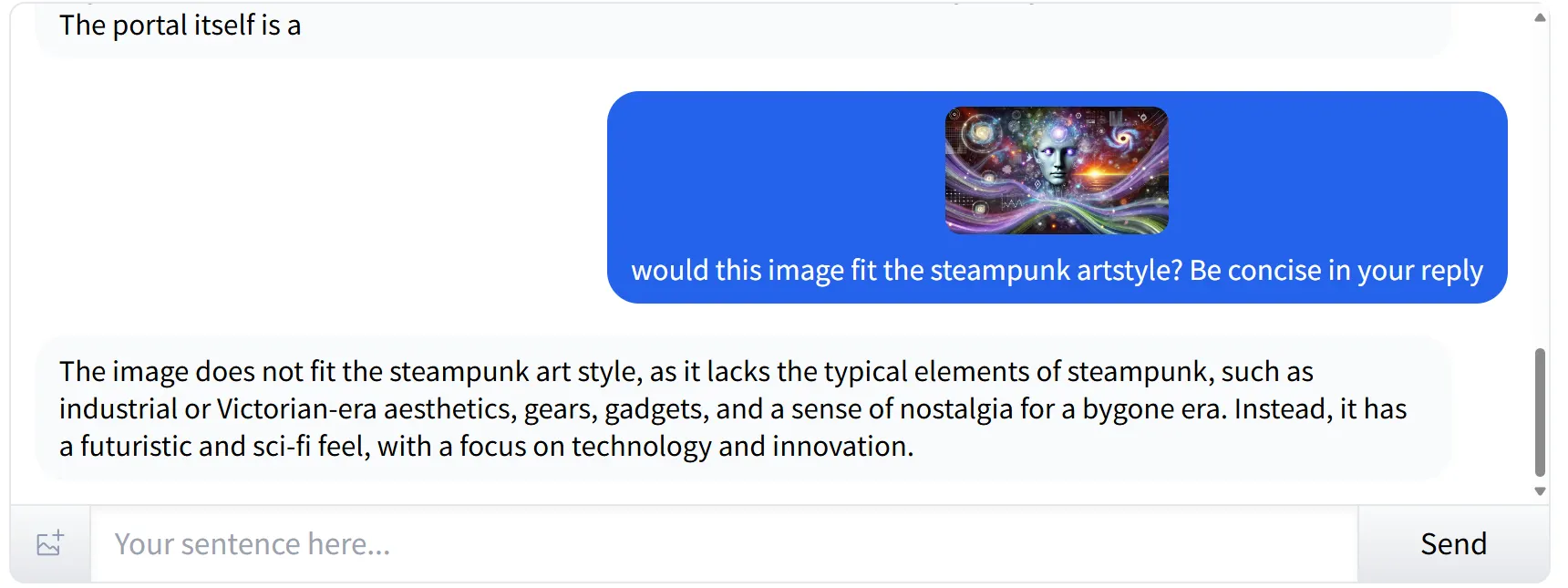

Identifying style and subjective elements in images

Llama 3.2 excels at identifying subjective elements in images. When presented with an image of a futuristic cyberpunk style and asked whether it fit the steampunk aesthetic, the model correctly identified the style and its elements. A satisfactory explanation was provided, pointing out that the image did not fit because it was missing key elements related to the steampunk genre.

Chart analysis (and SD image recognition)

Chart analysis is another powerful feature in Llama 3.2, but requires high-resolution images for optimal performance. Inputting a screenshot with a chart that other models like Molmo or Reka could interpret weakened Llama’s vision capabilities. The model apologized, explaining that the text could not be read properly due to image quality issues.

Text in image identification

Llama 3.2 struggled with small text in charts, but worked perfectly when reading text on large images. We showed a presentation slide introducing a person, and the model successfully understood the context, distinguishing between names and jobs without error.

verdict

Overall, Llama 3.2 is a significant improvement over the previous generation and a great addition to the open source AI industry. Its strengths lie in image interpretation and large text recognition, but there are some areas for potential improvement, particularly in handling low-quality images and complex custom coding tasks.

The promise of on-device compatibility is also good for the future of personal and local AI work, and is a great counterweight to closing offerings like the Gemini Nano and Apple’s proprietary models.

Editors: Josh Quittner and Sebastian Sinclair

generally intelligent newsletter

A weekly AI journey explained by Gen, a generative AI model.