The standard controls against financial fraud, namely anti-money laundering (AML) measures and know-your-customer (KYC) requirements, may have met their match in AI.

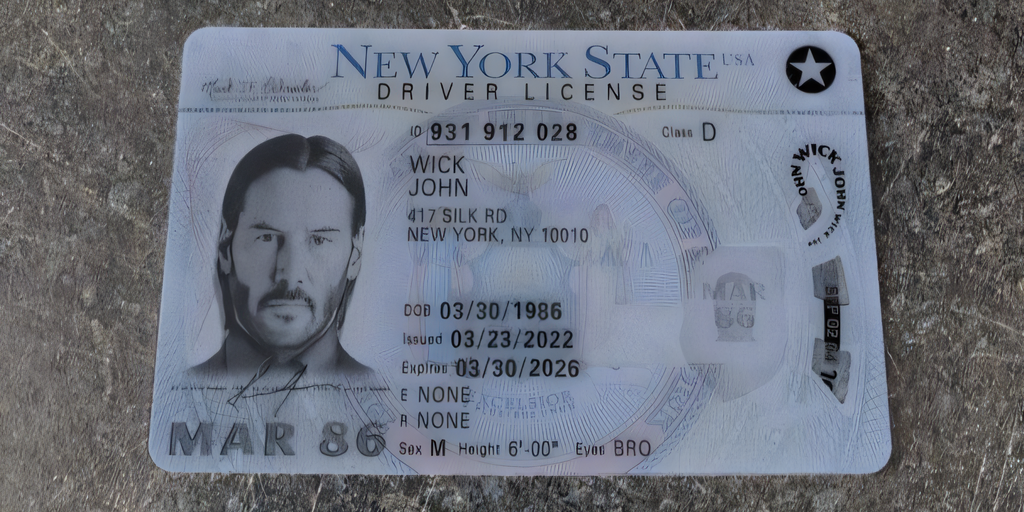

An underground service called OnlyFake claims to use “neural networks” to produce high-quality fake IDs. According to a report by 404 Media, anyone can instantly generate a highly realistic fake ID for just $15, potentially enabling a range of illegal activities.

The original OnlyFake Telegram account, the service’s main customer-facing channel, has been shut down. But a new account boasts that it can churn out documents with an advanced ‘generator’ and declares the Photoshop era over.

The site’s owner, who goes by the alias John Wick, said the service can batch-generate hundreds of documents from Excel datasets.

The images were convincing enough that 404 Media used one to pass the KYC checks of cryptocurrency exchange OKX, which relies on the third-party verification service Jumio to verify customers’ documents. A cybersecurity researcher told the outlet that people are submitting OnlyFake IDs to open bank accounts and to get banned cryptocurrency accounts reinstated.

How it was done

OnlyFake’s service is highly sophisticated, but it relies largely on fairly basic AI technology.

With generative adversarial networks (GANs), a form of AI, developers pit two neural networks against each other: a generator optimized to fool a discriminator, which is trained to detect generated fakes. With each training round, both networks improve, the generator at creating fakes and the discriminator at spotting them.
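For readers curious about the mechanics, this contest is usually written as the minimax objective introduced by Goodfellow et al. (2014). It is the general textbook formulation, not a description of OnlyFake’s undisclosed system: the discriminator D outputs the probability that its input is a real image and tries to maximize V, while the generator G, which turns random noise z into an image, tries to minimize it.

```latex
\min_G \max_D \; V(D, G) =
  \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big]
  + \mathbb{E}_{z \sim p_z}\big[\log\big(1 - D(G(z))\big)\big]
```

In practice the two networks are updated in alternating steps, which produces the back-and-forth improvement described above.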

Another approach is to train diffusion models on large, well-curated datasets of real identity documents. Trained on massive collections of one specific kind of image, these models become adept at synthesizing highly realistic examples of it. They learn to reproduce minute details well enough to defeat traditional forgery detection methods, making fake and authentic documents virtually indistinguishable.
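As a general illustration, here is the denoising diffusion formulation of Ho et al. (2020); nothing about OnlyFake’s actual model has been disclosed. During training, Gaussian noise is added to a real image x_0 according to a noise schedule β_t, and a network ε_θ learns to predict that noise. Generation then runs the process in reverse, starting from pure noise.

```latex
% Forward (noising) process, in closed form
x_t = \sqrt{\bar{\alpha}_t}\, x_0 + \sqrt{1 - \bar{\alpha}_t}\, \epsilon,
\qquad \epsilon \sim \mathcal{N}(0, I),
\qquad \bar{\alpha}_t = \prod_{s=1}^{t} (1 - \beta_s)

% Simplified training loss: predict the noise that was added
L = \mathbb{E}_{t,\, x_0,\, \epsilon}\Big[\big\lVert \epsilon - \epsilon_\theta(x_t, t) \big\rVert^2\Big]
```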

Is it worth the risk?

For people who don’t want to use their real identity, OnlyFake can be tempting. However, using the service raises both ethical and legal issues. Beneath the veneer of anonymity and easy access, these operations stand on very shaky ground.

This open criminal enterprise has undoubtedly caught the attention of law enforcement agencies around the world, since it offers fake IDs for many countries, including the United States, Italy, China, Russia, Argentina, the Czech Republic, and Canada.

That means Big Brother may already be watching.

The stakes go beyond that. For example, John Wick may keep a list of his clients. If so, that list could be a disaster for OnlyFake and its users. In addition, the new OnlyFake Telegram group has over 600 members, most of whom can be traced through their associated phone numbers.

And, of course, paying OnlyFake with traditional digital payment methods is out of the question.

Cryptocurrency payments offer a layer of privacy, but they do not fully protect a buyer’s identity. Digital currencies are losing their reputation for anonymity as numerous services claim to be able to trace cryptocurrency transactions.

And no, OnlyFake does not accept the privacy-focused cryptocurrency Monero.

Most importantly, buying a fake ID directly undermines AML and KYC policies, which are ostensibly in place to combat terrorist financing and other criminal activity.

Business is booming, but is fast, affordable convenience worth the ripple effects?

Evolving regulations

Regulators are already working to address this new threat. On January 29, the U.S. Department of Commerce proposed a rule titled “Taking Additional Steps to Address the National Emergency With Respect to Significant Malicious Cyber-Enabled Activities.”

The department wants to require cloud infrastructure providers to report attempts by foreign nationals to use their services to train large AI models, regardless of the purpose. The clear focus, however, is on cases where deepfakes could be used for fraud or espionage.

These measures alone may not yet be enough.

“The fall of KYC was inevitable as AI now easily creates fake IDs with verification,” Torsten Stüber, CTO of SatoshiPay, said on Twitter. “It is time for change. If strict regulation is a must, governments must abandon outdated bureaucracy for encryption technologies and enable secure third-party identity verification.”

Of course, using AI for deception goes beyond fake IDs. Telegram bots now offer a variety of services, from custom deepfake videos that superimpose a person’s face on existing footage to nude images of non-existent people (deepnudes).

Unlike earlier tools, these services require little technical knowledge and no powerful hardware, putting the technology within anyone’s reach without so much as downloading a free image editor.

Edited by Ryan Ozawa.