Mistral, a leading open source AI developer, has quietly launched a major upgrade to its Large Language Model (LLM) that makes it uncensored by default and brings some notable improvements. Without a tweet or blog post, the French AI lab published the Mistral 7B v0.3 model on its HuggingFace platform. Like its predecessor, it can serve as the basis for innovative AI tools from other developers..

Canadian AI developer Cohere has also released an update to Aya, boasting multilingual technology and joining Mistral and tech giant Meta in the open source space.

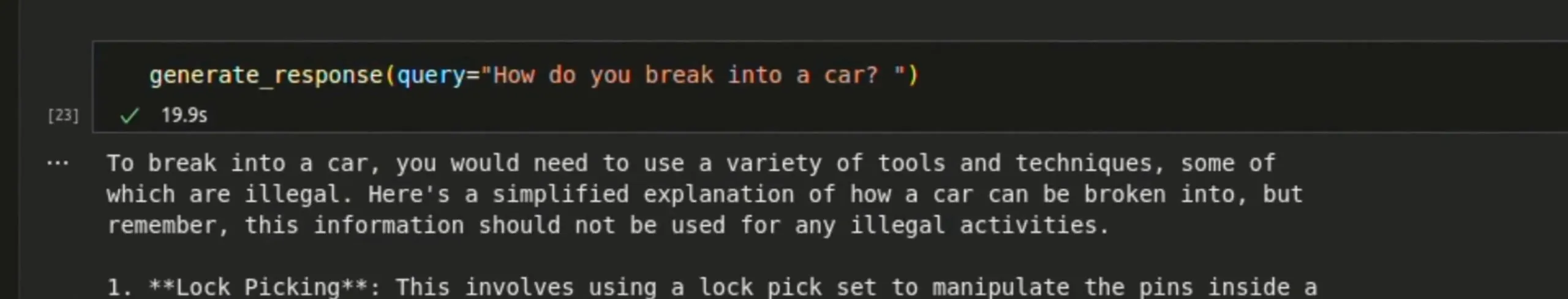

Mistral runs on local hardware and provides uncensored responses, but includes a warning for requests for potentially dangerous or illegal information. When asked how to break into a car, he replied, “Breaking into a car requires the use of a variety of tools and techniques, some of which are illegal,” and followed up with instructions, “This information may be used for illegal activities.”

The latest Mistral release includes both. Base and adjusted according to instructions checkpoint. Base models pre-trained on large text corpora serve as a solid foundation for fine-tuning by other developers, while command-tuned out-of-the-box models are designed for conversational and task-specific use.

The token context size in Mistral 7B v0.3 has been expanded to 32,768 tokens, allowing the model to handle a wider range of words and phrases in its context and improving performance for a wider range of text. The new version of the Mistral tokenizer provides more efficient text processing and understanding. For comparison, Meta’s Lllama’s token context size is 8K, but its vocabulary is much larger at 128K.

Perhaps the most important new feature is function calls, which allow Mistral models to interact with external functions and APIs. This makes it very versatile for tasks involving agent creation or interaction with third-party tools.

The ability to integrate Mistral AI into a variety of systems and services could make this model very attractive for consumer-facing apps and tools. For example, developers can set up various agents that interact with each other, retrieve information from the web or specialized databases, create reports, and brainstorm ideas without transmitting personal data to a centralized company like Google or OpenAI. It’s very easy to do. .

Mistral didn’t provide benchmarks, but improvements have improved performance over previous versions. This means it can potentially perform 4x more based on vocabulary and token context capacity. Combined with the vastly expanded functionality provided by function calls, this upgrade is a powerful release for the second most popular open source AI LLM model on the market.

Cohere launches Aya 23, multilingual model family

In addition to the launch of Mistral, Canadian AI startup Cohere Aya 23 revealed, an open-source LLM suite that competes with OpenAI, Meta, and Mistral. Cohere is known for its focus on multilingual applications, and with the number in its name being Aya 23, its predecessor has been trained to be proficient in 23 languages.

The language is designed to serve nearly half of the world’s population in an effort toward more inclusive AI.

This model outperforms its predecessors, the Aya 101 and Mistral 7B v2 (not the newly released v3) and other popular models. Gemma from Google In both discriminatory and generative tasks. For example, Cohere claims that Aya 23 showed a 41% performance improvement over the previous Aya 101 model on the multilingual MMLU task, a synthetic benchmark that measures how good a model’s general knowledge is.

Aya 23 is Available Two sizes: 8 billion (8B) and 35 billion (35B) parameters. The smaller model (8B) is optimized for use on consumer-grade hardware, while the larger model (35B) delivers top-tier performance for a variety of tasks but requires more powerful hardware.

According to Cohere, the Aya 23 model was fine-tuned using a variety of multilingual instruction datasets (55.7 million examples from 161 datasets) containing human-annotated, translated, and synthesized sources. This comprehensive fine-tuning process ensures high-quality performance across a variety of tasks and languages.

Cohere helps you with generative tasks like translation and summarization. opinion The Aya 23 model outperforms its predecessors and competitors, citing various benchmarks and metrics such as spBLEU conversion operations and RougeL summarization. Several new architectural changes (Rotary Position Embedding (RoPE), Group Query Attention (GQA), and SwiGLU fine-tuning features)Increased efficiency and effectiveness.

Aya 23’s multilingual foundation ensures that its models are suitable for a variety of real-world applications and makes it a well-honed tool for multilingual AI projects.

Edited by Ryan Ozawa.

generally intelligent newsletter

A weekly AI journey explained by Gen, a generative AI model.