Validated, staking on eth2: #5 – Why client diversity is important

*Disclaimer: None of this is meant as a slight against any particular client. Each client, and likely even the specification, has its own blind spots and bugs. Eth2 is a complex protocol, and the people implementing it are only human. The point of this article is to highlight how and why these risks are mitigated.*

With the launch of the Medalla testnet, people were encouraged to experiment with different clients, and right from the start we saw why: Nimbus and Lodestar nodes were unable to handle the workload of a full testnet and got stuck. [0][1] As a result, Medalla failed to finalize for the first 30 minutes of its existence.

On August 14th, Prysm nodes lost track of time when one of the time servers they were using as a reference suddenly jumped into the future. These nodes then began producing blocks and attestations as if they were also in the future. When the clocks on these nodes were corrected (either by updating the client or because the timeserver returned to the correct time), the nodes that had disabled the default slashing protection were slashed.

Exactly what happened is a bit more nuanced, and I highly recommend reading Raul Jordan's incident report.

Clock Failure – Compounding Failure

At the moment Prysm nodes started time traveling, they made up ~62% of the network. This meant that the threshold for finalizing blocks (>2/3 on one chain) could not be met. Worse still, these nodes couldn't find the chain they were expecting (there was a 4-hour “gap” in history, and they had all jumped to slightly different times), so they flooded the network with short forks as they guessed at the “missing” data.

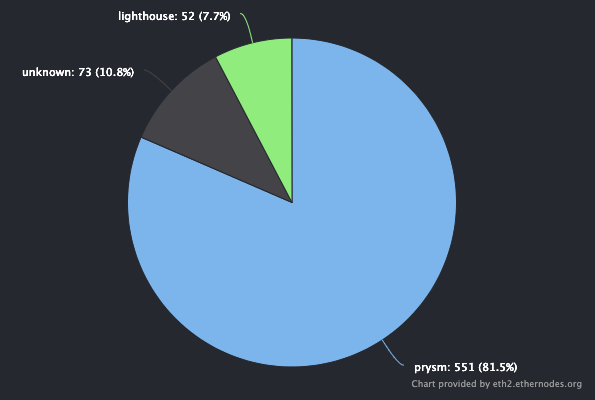

Prysm currently accounts for 82% of Medalla nodes 😳! (ethernodes.org)

At this point, the network was flooded with thousands of different guesses about the head of the chain, and all clients began to struggle under the increased workload of figuring out which chain was correct. This caused nodes to fall behind, need to resync, run out of memory, and suffer other forms of chaos, all of which made the problem worse.

Ultimately, this was a good thing. It allowed us to fix not just the root clock issue, but also to stress-test the clients under conditions of massive node failure and network load. That said, this failure didn't need to be so extreme, and in this case the culprit was Prysm's dominance.

Shilling Decentralization – Part 1, It’s Good for eth2

As discussed previously, 1/3 is the magic number for safe, asynchronous BFT algorithms. If more than 1/3 of validators are offline, epochs can no longer be finalized. So while the chain keeps growing, it is no longer possible to point to a block and guarantee that it will remain part of the canonical chain.
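
The threshold arithmetic can be sketched in a few lines of Python. This is a simplified model, not client or specification code. It assumes that finality needs at least 2/3 of the stake attesting to the same chain. It also treats the time-traveling Prysm nodes from the Medalla incident as contributing nothing to the canonical chain. `can_finalize` is a hypothetical helper.

```python
from fractions import Fraction

# Assumption: finality needs at least 2/3 of the stake on one chain, so it
# stalls once strictly more than 1/3 of the stake is offline.
FINALITY_THRESHOLD = Fraction(2, 3)


def can_finalize(offline_fraction: Fraction) -> bool:
    """Hypothetical helper: does the remaining online stake reach the threshold?"""
    online_fraction = 1 - offline_fraction
    return online_fraction >= FINALITY_THRESHOLD


# Medalla on August 14th: ~62% of the network (Prysm) was effectively offline.
print(can_finalize(Fraction(62, 100)))  # False
# A single client holding 20% of the network fails: finality continues.
print(can_finalize(Fraction(20, 100)))  # True
```

Exact fractions are used instead of floats so that comparisons close to the 2/3 boundary are not affected by rounding.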

Shilling Decentralization – Part 2, It’s Good for You

To the greatest extent possible, validators are incentivized to do what is good for the network, rather than simply trusted to do something because it is the right thing to do.

If more than 1/3 of nodes are offline, the penalties for offline nodes start ramping up. This is called the inactivity penalty.

This means that, as a validator, you want to make sure that if something causes your node to go offline, it is unlikely to take many other nodes offline at the same time.
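
The shape of the inactivity penalty can be illustrated with a toy model. Everything here is an illustrative assumption rather than the specification's formula. The grace period, the quotient, and the helper names `leak_for_epoch` and `total_leak` are all hypothetical, and balances are held constant for simplicity. The model only captures the qualitative point made above:

- No extra penalty is charged while the chain keeps finalizing.
- Once finality stalls, each offline validator is charged a per-epoch penalty that grows with the time since finality.
- As a result, cumulative losses grow roughly quadratically with the length of the outage.

```python
GWEI_PER_ETH = 10**9
GRACE_EPOCHS = 4          # assumption: epochs without finality before the leak starts
PENALTY_QUOTIENT = 2**24  # assumption: illustrative constant, not taken from the spec


def leak_for_epoch(effective_balance: int, epochs_since_finality: int) -> int:
    """Hypothetical penalty (in Gwei) charged to one offline validator for one epoch."""
    if epochs_since_finality <= GRACE_EPOCHS:
        return 0
    # The per-epoch penalty grows linearly with the time since finality.
    return effective_balance * epochs_since_finality // PENALTY_QUOTIENT


def total_leak(effective_balance: int, epochs: int) -> int:
    """Cumulative penalty over `epochs` consecutive epochs without finality."""
    return sum(leak_for_epoch(effective_balance, e) for e in range(1, epochs + 1))


balance = 32 * GWEI_PER_ETH
print(total_leak(balance, 4))  # 0: still within the grace period
print(total_leak(balance, 100) / GWEI_PER_ETH, total_leak(balance, 200) / GWEI_PER_ETH)
```

In this model, doubling the length of a non-finalizing outage roughly quadruples the loss. The leak only starts at all when enough of the network is offline together, so your downtime costs you far more when it is correlated with everyone else's.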

The same goes for getting slashed. There is always a chance that a validator gets slashed due to a specification or software mistake/bug, but the penalty for a single slashing is “only” 1 ETH.

However, if many validators are slashed at the same time, the penalty climbs up to 32 ETH. The point at which this happens is once again the magic 1/3 threshold. (An explanation of why this is so can be found here.)
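
Here is a simplified sketch, in Gwei, of how the slashing penalty might scale with correlation. It assumes, consistent with the figures above, that the penalty has two parts:

- An initial penalty of 1/32 of the effective balance (1 ETH for a 32 ETH validator).
- A correlated penalty proportional to three times the fraction of total stake slashed in the same window, so that a 1/3 slashed fraction costs the whole balance.

The constant names and `slashing_penalty` are hypothetical. Details of the real specification, such as when the correlated part is applied and how it is rounded, are omitted.

```python
GWEI_PER_ETH = 10**9
INITIAL_PENALTY_QUOTIENT = 32  # assumption: 32 ETH // 32 gives the "only 1 ETH" case
CORRELATION_MULTIPLIER = 3     # assumption: 1/3 of stake slashed -> full balance lost


def slashing_penalty(effective_balance: int, slashed_balance: int, total_balance: int) -> int:
    """Hypothetical penalty in Gwei for one slashed validator.

    slashed_balance: total stake slashed in the same window (including this validator).
    total_balance: total active stake in the network.
    """
    initial = effective_balance // INITIAL_PENALTY_QUOTIENT
    adjusted = min(slashed_balance * CORRELATION_MULTIPLIER, total_balance)
    correlated = effective_balance * adjusted // total_balance
    # A validator can never lose more than its stake.
    return min(initial + correlated, effective_balance)


stake = 32 * GWEI_PER_ETH
# One validator slashed alone among 100,000: just over 1 ETH.
print(slashing_penalty(stake, stake, 100_000 * stake) / GWEI_PER_ETH)
# 1/3 of a 300-validator network slashed together: the full 32 ETH.
print(slashing_penalty(stake, 100 * stake, 300 * stake) / GWEI_PER_ETH)  # 32.0
```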

These incentives are called liveness anti-correlation and safety anti-correlation, respectively, and they are very intentional aspects of eth2's design. Anti-correlation mechanisms incentivize validators to make decisions in the best interest of the network by tying individual penalties to how much each validator's behavior affects the network.

Shilling Decentralization – Part 3, The Numbers

Eth2 is being implemented by many independent teams, each developing an independent client based on the specification, which was mostly written by the eth2 research team. This ensures there are multiple beacon node and validator client implementations, each making different decisions about the technologies, languages, optimizations, trade-offs, etc. needed to build an eth2 client. This way, a bug in any layer of the system only affects those running that particular client, not the entire network.
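
To make the 1/3 threshold concrete for client choice, here is a small hypothetical check. Given each client's share of the network, which clients could halt finality by failing on their own? The client names and both distributions are made-up illustrations, not measured data. The concentrated example only loosely echoes the 82% figure above.

```python
from fractions import Fraction

# Assumption: a single client's bug takes all of its nodes offline at once,
# and finality stalls when more than 1/3 of the network is offline.
ONE_THIRD = Fraction(1, 3)


def clients_that_can_halt_finality(shares: dict[str, Fraction]) -> list[str]:
    """Return the clients whose failure alone would push >1/3 of nodes offline."""
    return sorted(name for name, share in shares.items() if share > ONE_THIRD)


# Hypothetical distributions (each sums to 1).
concentrated = {
    "client_a": Fraction(82, 100),
    "client_b": Fraction(8, 100),
    "client_c": Fraction(6, 100),
    "client_d": Fraction(4, 100),
}
diverse = {
    "client_a": Fraction(30, 100),
    "client_b": Fraction(25, 100),
    "client_c": Fraction(25, 100),
    "client_d": Fraction(20, 100),
}

print(clients_that_can_halt_finality(concentrated))  # ['client_a']
print(clients_that_can_halt_finality(diverse))       # []
```

In the diverse case, no single client bug can stop the network from finalizing on its own.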

In the case of the Prysm Medalla timing bug, had only 20% of eth2 nodes been running Prysm and 85% of the remaining nodes been online, the problem could have been fixed without Prysm nodes incurring any inactivity penalty. The only cost would have been some pain and sleepless nights for the developers.
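
Here is the arithmetic behind this scenario as a quick check. It assumes, as above, that finality needs at least 2/3 of the network online and that all Prysm nodes are offline.

```python
from fractions import Fraction

prysm_share = Fraction(20, 100)    # hypothetical: Prysm runs 20% of nodes
others_online = Fraction(85, 100)  # 85% of the remaining nodes are online

# Prysm nodes are assumed fully offline, so only the other 80% can attest.
online = (1 - prysm_share) * others_online
print(online, online >= Fraction(2, 3))  # 17/25 True
```

That leaves 68% of the network online, above the 2/3 needed to keep finalizing, so the inactivity penalty would never kick in.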

In contrast, because so many people were running the same client (many of them with slashing protection disabled), roughly 3500-5000 validators were slashed in a short period of time.* This high degree of correlation means those validators lost ~16 ETH each, simply because they were using a popular client.

*At the time of writing, slashings are still pouring in, so final numbers aren't out yet.

Try Something New

Now is the time to experiment with different clients. Find a client that only a minority of validators are using (you can check the distribution here). Lighthouse, Teku, Nimbus and Prysm are all fairly stable for now, while Lodestar is catching up quickly.

Most importantly, try a new client! As we prepare for a decentralized mainnet, we have the opportunity to create a healthier client distribution on Medalla.