Verified, staked on eth2: #6 – Perfection is the enemy of the good.

T'was the day before genesis, when all was prepared, geth was in sync, my beacon node paired. Firewalls configured, VLANs galore, hours of preparation meant nothing ignored.

Then all at once everything went awry, the SSD in my system decided to die. My configs were gone, chain data was history, nothing to do but trust in next day delivery.

I found myself designing backups and redundancies. Complicated systems consumed my fantasies. Thinking further I came to realise: worrying about these kinds of failures was quite unwise.

event

The beacon chain has several mechanisms to incentivize validator behavior, all of which depend on the current state of the network. Therefore, it is important to consider these instances of failure in the larger context of how other validators can fail. It’s not a worthwhile way to secure your nodes.

As an active validator, your balance increases or decreases and never goes sideways*. So a very rational way to maximize your profits is to minimize your downsides. There are three ways in which a beacon chain can reduce your balance:

- punishment Issued when a validator misses one of his missions (e.g. because he is offline).

- inert leak It is passed on to validators who missed their duties while the network was not completing (i.e. if there is a high correlation between your validator being offline and another validator being offline).

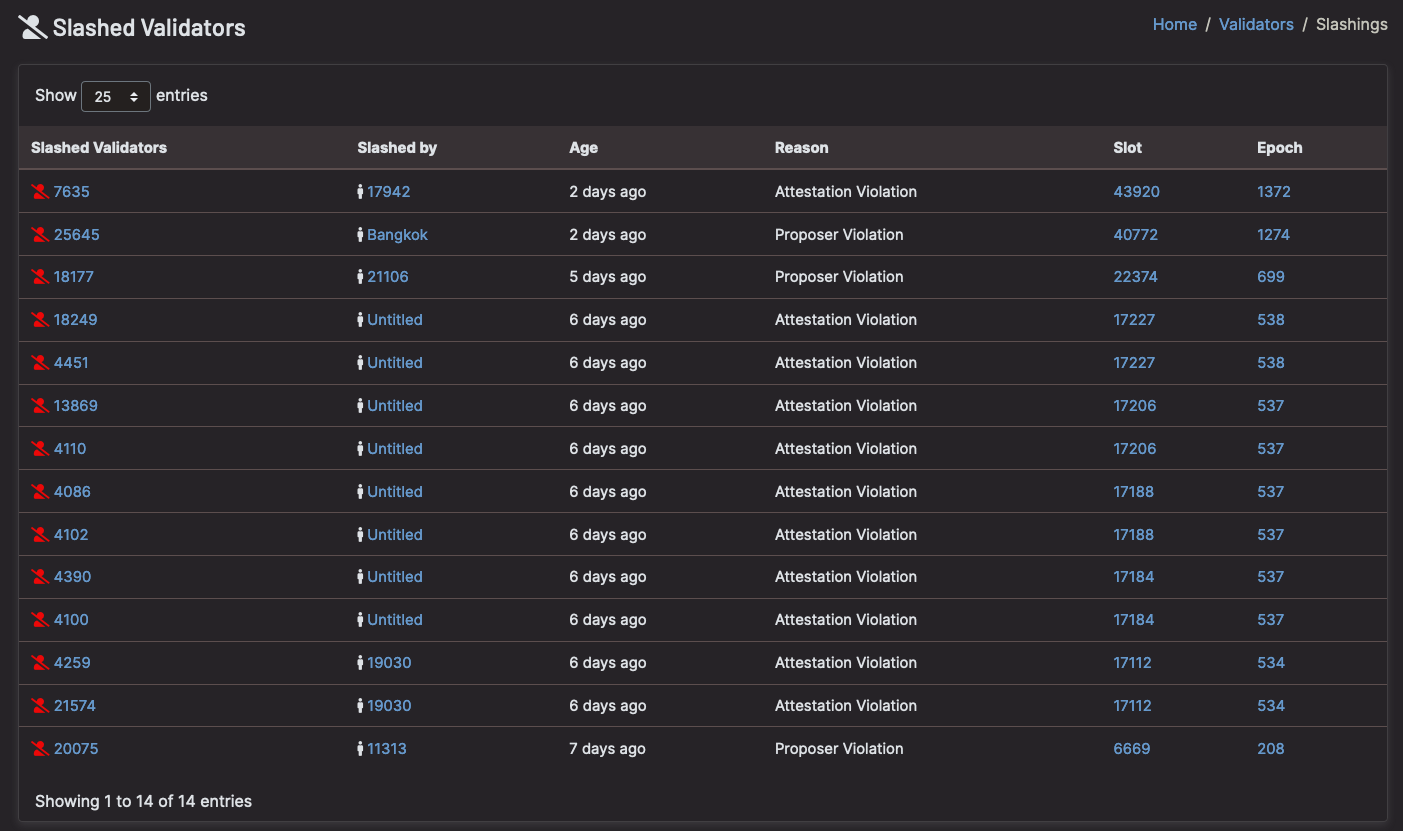

- slashing This is presented to the verifier, which can be used in attacks by generating contradictory blocks or proofs.

* On average, a validator’s balance may remain the same, but they are rewarded or punished for a given task.

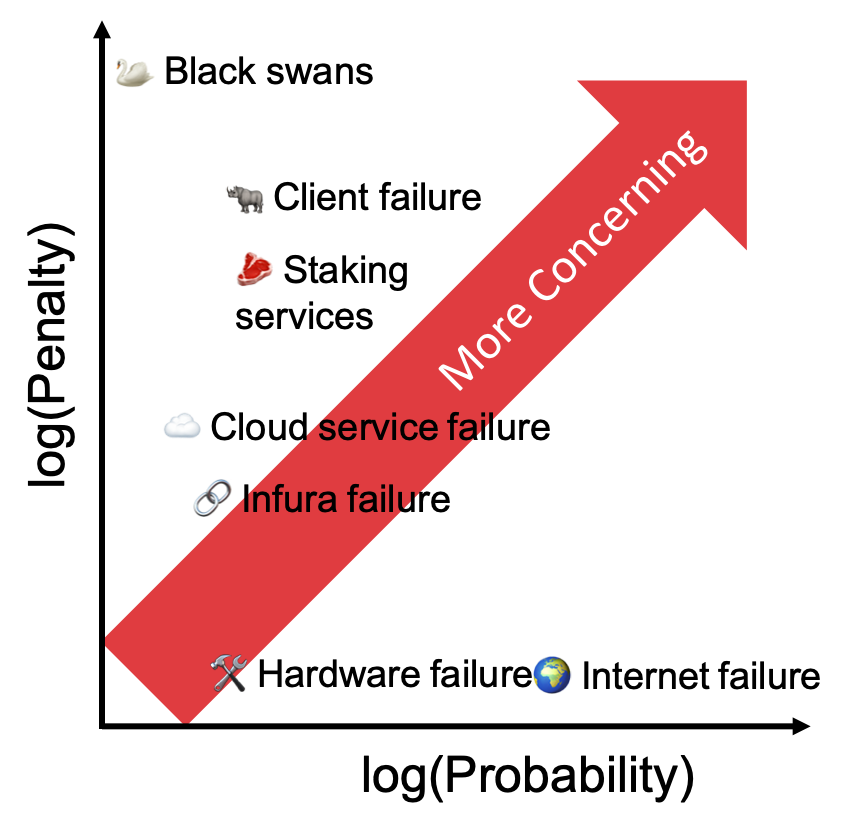

correlation

If a single validator goes offline or performs a slashable action, the impact in terms of the overall health of the beacon chain is small. Therefore, there is no significant punishment. Conversely, if many validators are offline, the balances of offline validators can decrease much faster.

Likewise, if many validators perform slashable operations simultaneously, from the perspective of the beacon chain, this is indistinguishable from an attack. Therefore, this will be treated as such and 100% of the problematic validator’s stake will be burned.

These “anti-correlation” incentives should worry validators. more Talk about failures that can affect others at the same time, rather than about individual problems.

Cause and Probability.

So, let’s examine this through the lens of how many other people will be affected at the same time through a few examples of failures, and how severely validators will be punished.

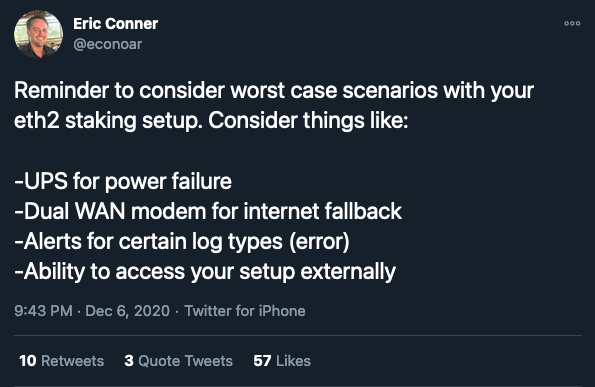

I disagree with @econoar. here these are worst case problem. This is a more intermediate level problem. Home UPS and dual WAN address errors are much lower on the list of concerns as they are not correlated to other users.

🌍Internet/Power Outage

If you’re validating at home, there’s a good chance you’ll run into one of these errors at some point in the future. Residential internet and power connections do not have guaranteed uptime. However, when the internet goes down or there is a power outage, the outage is usually localized and, even then, only for a few hours.

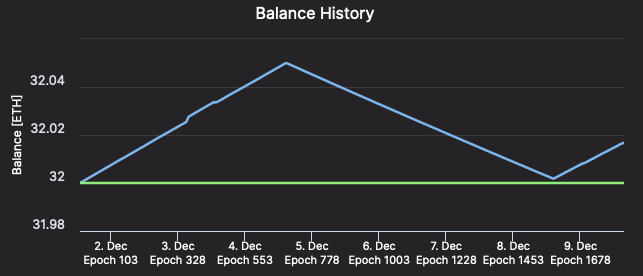

unless you have very If your internet/power is unreliable, it may not be worth paying for a lost connection. You will incur a penalty of a few hours, but since the rest of the network operates normally, the penalty will be roughly equal to the reward received during the same period. in other words, K A one-hour failure will return the validator’s balance to approximately its original level. K A few hours before failure, and K During the additional time, the validator’s balance returns to its pre-failure amount.

(Validator #12661 Get your ETH back as fast as you lost it – Beacon Chine

🛠 Hardware error

Like Internet failures, hardware failures occur randomly and can cause nodes to go down for days. It is important to consider the cost of redundant hardware and the expected rewards over the lifetime of the validator. Is the expected value of the error (offline penalty multiplied by the probability of the error occurring) greater than the cost of redundant hardware?

Personally, the chance of failure is low enough and the cost of fully redundant hardware is high enough that it’s almost certainly not worth it. But again, I’m not a whale 🐳 ; As with all failure scenarios, you need to evaluate how this applies to your specific situation.

☁️ Cloud service failure

Maybe you decide to choose a cloud provider to completely avoid the risk of hardware or Internet failure. Using a cloud provider introduces the risk of correlated errors. The important question is, How many other validators are using the same cloud provider as you?

A week before Genesis, Amazon AWS is out for an extended period of time This affected many parts of the web. If something similar were to happen now, enough validators would go offline at the same time that inactivity penalties would kick in.

Worse, you could be devastated if your cloud provider clones the VM running your node and accidentally leaves the old and new nodes running at the same time (this accidental duplication affects many other nodes). The punishment resulting from this will be particularly severe). do).

If you want to rely solely on your cloud provider, consider switching to a smaller provider. As a result, you can save a lot of ETH.

🥩 Staking service

there is Multiple staking services There are various levels of decentralization on mainnets today, but trusting ETH all increases the risk of correlation failure. These services are essential components of the eth2 ecosystem. It is imperfect because it is designed by humans, especially for those who have less than 32 ETH or do not have the technical know-how about staking.

If your staking pool eventually becomes as large as an eth1 mining pool, you might think that bugs could lead to massive cuts to members or penalties for inactivity.

🔗Infura Failure

Last month Infura went down for 6 hours. Causing disruption across the Ethereum ecosystem. It’s easy to see how this would likely lead to a correlated failure of the eth2 validator.

Third-party eth1 API providers must also limit the rate of calls to their services. In the past, this prevented validators (on the Medella testnet) from producing valid blocks.

The best solution is to run your own eth1 node. Rate limiting does not occur, errors are less likely to be correlated, and decentralization across the network is improved.

Eth2 clients have also started adding the possibility to specify multiple eth1 nodes. This makes it easy to switch to the backup endpoint if the primary endpoint fails (Lighthouse: –eth1-endpointprism: PR#8062Nimbus & Teku will likely add support somewhere in the future).

We recommend adding a backup API option with cheap/free insurance (EthereumNodes.com Shows free and paid API endpoints and their current status. This is useful whether you run your own eth1 node or not.

🦏 Failure of certain eth2 clients

Despite all the code reviews, audits, and Rockstar work, every eth2 client has a bug lurking somewhere. Most of them are minor and will be caught before they cause major problems in production. However, there is always a chance that the client you choose will go offline or you will get cut. If this happens, you don’t want to have clients running on more than 1/3 of the nodes in your network.

You have to balance what you think are your best clients with the popularity of those clients. If you experience problems with your node, read the documentation for the other clients so you know what to expect in terms of installing and configuring them.

If you have a lot of ETH at stake, it may be worth running multiple clients, each using a portion of ETH, to avoid putting all your eggs in one basket. Otherwise, Guarantee An interesting proposal for a multi-node staking infrastructure. Secret Share Validator We are seeing rapid progress.

🦢 black swan

Of course, there are many improbable and unpredictable but risky scenarios that always pose a risk. Scenarios outside of the obvious decision to set up staking. An example like this: Specter and collapse Hardware level or kernel bugs such as: bleeding teeth This suggests some risk that exists across the entire hardware stack. By definition, it is impossible to fully predict and prevent these problems, and we typically have to react after the fact.

what to worry about

Ultimately, it comes down to calculating expected value. Prior) For a given failure: how likely is it that the event will occur, and if it does, what will be the punishment? It is important to consider these failures in the context of the rest of the eth2 network, as correlation will have a huge impact on the penalties faced. Comparing the expected cost of a failure with the cost of mitigating it can give you a reasonable answer as to whether it is worth facing.

No one can know all the ways a node can fail or how likely each failure is, but by separately estimating the likelihood of each failure type and mitigating the greatest risk, the “wisdom of crowds” wins and the network, on average, succeeds. It will. I would give it a good rating overall. Moreover, because each validator faces different risks and has different estimates of risk, unexplained failures will be caught by different validators, reducing the degree of correlation. Ah, decentralization!

📕 Don’t panic

Lastly, if something happens to your node, don’t panic! Even during inactive leaks, the penalty is small on short time scales. Take a moment to think about what happened and why. Then create an action plan to solve the problem. Then take a deep breath before continuing. An extra five minutes of penalty is better than being flogged for a hasty indiscretion.

Above all: 🚨 Never run two nodes with the same validator key! 🚨

Thanks to Danny Ryan, Joseph Schweitzer, and Sacha Yves Saint-Leger for their reviews.

(slashing because the verifier ran more than 1 node – Beacon Chine)